Apple continues to explore how generative AI can improve app development pipelines. Here’s what they’re looking at.

A bit of background

A few months ago, a team of Apple researchers published an interesting study on training AI to generate functional UI code.

Rather than design quality, the study focused on making sure the AI-generated code actually compiled and roughly matched the user’s prompt in terms of what the interface should do and look like.

The result was UICoder, a family of open-source models which you can read more about here.

The new study

Now, part of the team responsible for UICoder has released a new paper titled “Improving User Interface Generation Models from Designer Feedback.”

In it, the researchers explain that existing Reinforcement Learning from Human Feedback (RLHF) methods aren’t well suited to training LLMs to reliably generate well-designed UIs, since they “are not well-aligned with designers’ workflows and ignore the rich rationale used to critique and improve UI designs.”

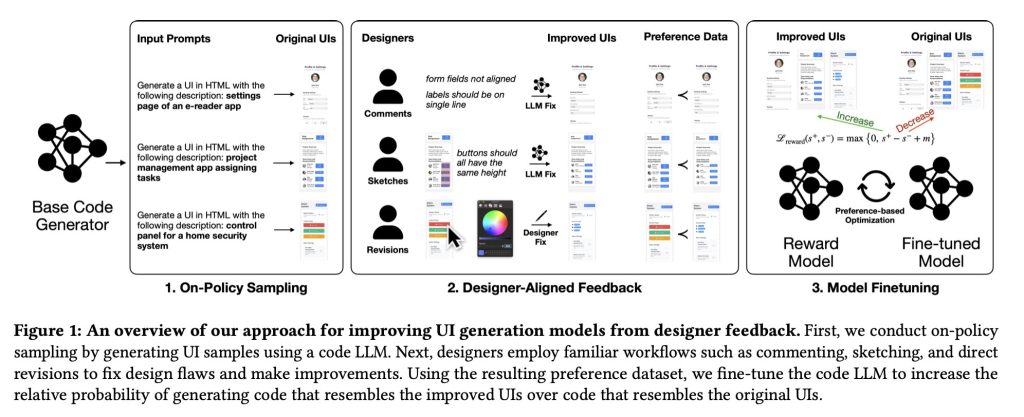

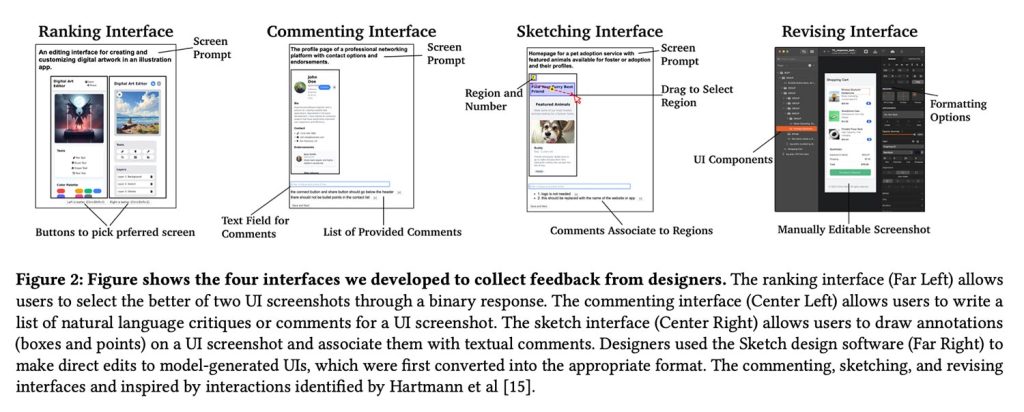

To tackle this problem, they proposed a different route. They had professional designers directly critique and improve model-generated UIs using comments, sketches, and even hands-on edits, then converted those before-and-after changes into data used to fine-tune the model.

This allowed them to train a reward model on concrete design improvements, effectively teaching the UI generator to prefer layouts and components that better reflected real-world design judgment.

The setup

In total, 21 designers participated in the study:

The recruited participants had varying levels of professional design experience, ranging from 2 to over 30 years. Participants also worked in different areas of design, such as UI/UX design, product design, and service design. Participating designers also noted the frequency of conducting design reviews (both formal and informal) in job activities: ranging from once every few months to multiple times a week.

The researchers collected 1,460 annotations, which were then converted into paired UI “preference” examples, contrasting the original model-generated interface with the designers’ improved versions.
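The conversion step can be pictured as a small data transformation: each annotation yields a pair in which the designer’s improved UI is “chosen” and the original model output is “rejected.” This is a minimal sketch, not Apple’s code. The `Annotation` and `PreferencePair` types and the `to_preference_pairs` function are hypothetical, and the sketch assumes each annotation already includes an improved version of the UI (for comment or sketch feedback, producing that version is a separate step not shown here).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Annotation:
    """One designer annotation (hypothetical schema, not the paper's format)."""
    description: str    # target description of the UI
    original_ui: str    # model-generated UI code
    improved_ui: str    # designer-improved version of the same UI
    feedback_type: str  # e.g. "comment", "sketch", or "revision"


@dataclass
class PreferencePair:
    description: str
    chosen: str    # preferred UI: the designer's improvement
    rejected: str  # dispreferred UI: the original model output


def to_preference_pairs(annotations: List[Annotation]) -> List[PreferencePair]:
    """Contrast each original UI with its designer-improved version."""
    pairs = []
    for a in annotations:
        # Assumption: an unchanged UI carries no preference signal, so skip it.
        if a.improved_ui == a.original_ui:
            continue
        pairs.append(PreferencePair(a.description, chosen=a.improved_ui, rejected=a.original_ui))
    return pairs


anns = [
    Annotation("a login screen", "<form>...</form>", "<form class='spaced'>...</form>", "revision"),
    Annotation("a settings page", "<ul>...</ul>", "<ul>...</ul>", "comment"),
]
print(len(to_preference_pairs(anns)))  # 1
```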

Those pairs, in turn, were used to train a reward model for fine-tuning the UI generator:

The reward model accepts i) a rendered image (a UI screenshot) and ii) a natural language description (a target description of the UI). These two inputs are fed into the model to produce a numerical score (reward), which is calibrated so that better-quality visual designs result in larger scores. To assign rewards to HTML code, we used the automated rendering pipeline described in Section 4.1 to first render code into screenshots using browser automation software.
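The article doesn’t say which training objective the reward model uses. A common choice for learning from paired preferences is a Bradley-Terry style loss, which pushes the score of the preferred UI above the score of the rejected one. The sketch below assumes that objective. `score_ui`, `Renderer`, and `RewardModel` are hypothetical stand-ins for the browser-based screenshot renderer and the image-plus-description reward model the paper describes; the toy renderer and reward used at the end are purely illustrative.

```python
import math
from typing import Callable

# Hypothetical types: a renderer that turns UI code into screenshot bytes,
# and a reward model that scores a (screenshot, description) pair.
Renderer = Callable[[str], bytes]
RewardModel = Callable[[bytes, str], float]


def score_ui(html: str, description: str, render: Renderer, reward: RewardModel) -> float:
    """Render HTML to a screenshot first, then score the image against the description."""
    return reward(render(html), description)


def pairwise_loss(score_chosen: float, score_rejected: float) -> float:
    """Bradley-Terry style loss: -log(sigmoid(score_chosen - score_rejected)).

    Small when the preferred UI outscores the rejected one, large otherwise.
    Split into two branches so large score gaps don't overflow math.exp.
    """
    margin = score_chosen - score_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Toy renderer and reward model, for illustration only.
def toy_render(html: str) -> bytes:
    return html.encode()


def toy_reward(image: bytes, description: str) -> float:
    return 2.0 if b"padding" in image else 0.0


good = score_ui("<div style='padding:16px'>", "a settings page", toy_render, toy_reward)
bad = score_ui("<div>", "a settings page", toy_render, toy_reward)
print(pairwise_loss(good, bad) < pairwise_loss(bad, good))  # True
```

Training would adjust the reward model so this loss shrinks across all pairs, which is one way to get the calibration the paper describes, where better designs receive larger scores.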

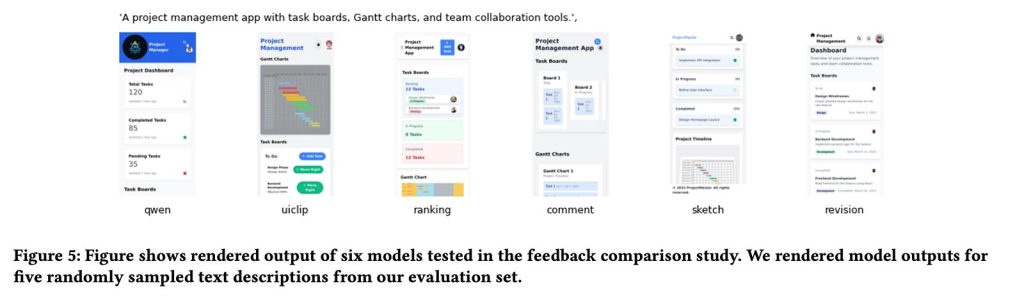

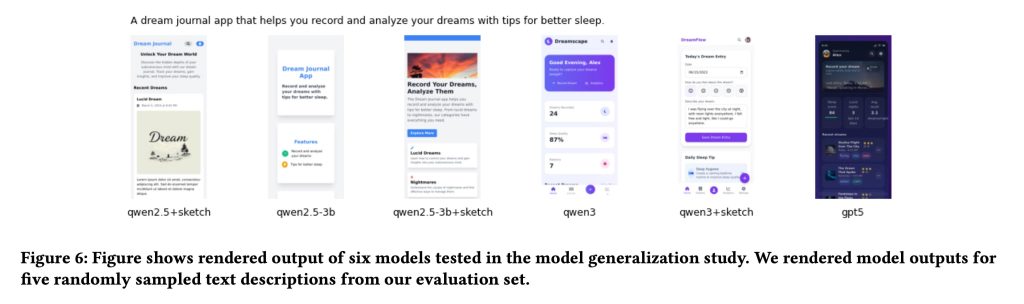

As for the generator models, Apple used Qwen2.5-Coder as the primary base model for UI generation, and later applied the same designer-trained reward model to smaller and newer Qwen variants to test how well the approach generalized across different model sizes and versions.

Interestingly, as the study’s own authors note, the resulting framework ends up looking a lot like a traditional RLHF pipeline. The difference, they argue, is that the learning signal comes from designer-native workflows (comments, sketches, and hands-on revisions) rather than from thumbs-up/down ratings or simple ranking data.

The results

So, did it actually work? According to the researchers, the answer is yes, with important caveats.

In general, models trained on designer-native feedback (especially with sketches and direct revisions) produced noticeably higher-quality UI designs than both the base models and versions trained using only conventional ranking or rating data.

In fact, the researchers noted that their best-performing model (Qwen3-Coder fine-tuned with sketch feedback) outperformed GPT-5. Perhaps more impressively, that result came from just 181 sketch annotations from designers.

Our results show that fine-tuning with our sketch-based reward model consistently led to improvements in UI generation capabilities for all tested baselines, suggesting generalizability. We also show that a small amount of high-quality expert feedback can efficiently enable smaller models to outperform larger proprietary LLMs in UI generation.

As for the caveat, the researchers noted that subjectivity plays a big part when it comes to what, exactly, constitutes a good interface:

One major challenge of our work and other human-centered problems is handling subjectivity and multiple resolutions of design problems. Both phenomena can also lead to high variance in responses, which poses challenges for widely-used ranking feedback mechanisms.

In the study, this variance manifested as disagreement over which designs were actually better. When researchers independently evaluated the same UI pairs that designers had ranked, they agreed with the designers’ choices only 49.2% of the time, essentially a coin flip.

On the other hand, when designers provided feedback by sketching improvements or directly editing the UIs, the research team agreed with those improvements much more often: 63.6% for sketches, and 76.1% for direct edits.

In other words, when designers could show specifically what they wanted to change, rather than just picking between two options, it was easier to agree on what “better” actually meant.
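The agreement figures in the study are a form of percent agreement: the share of items on which the research team made the same call as the designer. A minimal sketch of that arithmetic, using made-up labels rather than the study’s data (the `agreement_rate` function is hypothetical):

```python
from typing import Sequence


def agreement_rate(designer: Sequence[str], researcher: Sequence[str]) -> float:
    """Fraction of items on which two raters made the same choice."""
    if len(designer) != len(researcher):
        raise ValueError("both raters must label the same items")
    if not designer:
        raise ValueError("no items to compare")
    matches = sum(d == r for d, r in zip(designer, researcher))
    return matches / len(designer)


# Illustrative labels only: which of two UIs ("A" or "B") each rater preferred.
designer_picks = ["A", "B", "A", "A"]
researcher_picks = ["A", "A", "A", "B"]
print(agreement_rate(designer_picks, researcher_picks))  # 0.5
```

With only two options per pair, a rater choosing at random would agree with any other rater about 50% of the time, which is why a 49.2% agreement rate on rankings reads as essentially chance.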

For a deeper look into the study, including more technical aspects, training material, and more examples of the interfaces, follow this link.
