Smartphone cameras get better every year, but compact sensors still have many limitations, especially for low-light photos and zoom. Samsung was one of the first to add a periscope lens to its smartphones for better zoom. With this lens, users can even take pictures of the moon with their phone. But this has stirred controversy, as some users have questioned whether these photos are in fact real. And I say they are.

Modern smartphone cameras and AI

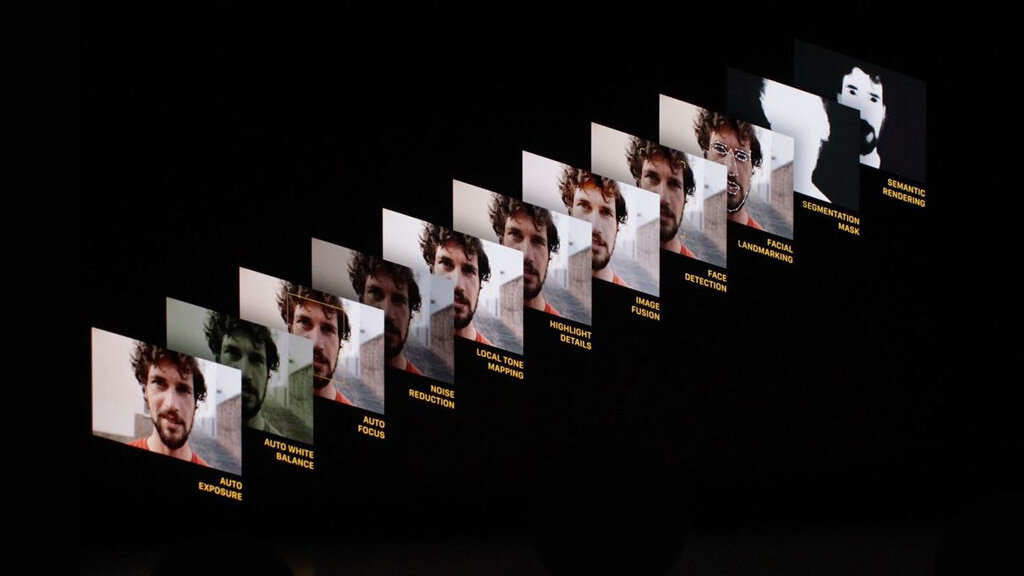

It’s now impossible to talk about smartphone cameras without mentioning improvements made by artificial intelligence. Pretty much every phone manufacturer from Apple to Google to Samsung uses AI to improve the pictures taken by users. Such technology can mitigate the lack of a large camera sensor in many cases.

For example, Apple introduced Deep Fusion and Night Mode with the iPhone 11. Both technologies use AI to combine the best parts of multiple images into a better photo when there’s very little light. The iPhone and other smartphones also use AI to make the sky bluer, the grass greener, and food more appealing.
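To give a rough idea of why combining several frames helps in low light, here is a minimal sketch in Python. It is not Apple's algorithm: it simply averages simulated noisy captures of the same scene, and the function names, the noise level, and the 16-frame count are all assumptions made for illustration.

```python
import random


def simulate_frame(scene, noise_sigma, rng):
    """Return one noisy capture of the scene (Gaussian sensor noise, an assumption)."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]


def merge_frames(frames):
    """Average the frames pixel by pixel."""
    return [sum(values) / len(values) for values in zip(*frames)]


def rms_error(image, reference):
    """Root-mean-square difference between an image and the true scene."""
    squared = [(a - b) ** 2 for a, b in zip(image, reference)]
    return (sum(squared) / len(squared)) ** 0.5


rng = random.Random(0)
# A hypothetical dim scene: 1,000 "pixels", half dark and half slightly brighter.
scene = [20.0] * 500 + [60.0] * 500
frames = [simulate_frame(scene, 10.0, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
merged_error = rms_error(merge_frames(frames), scene)
print(f"one frame: {single_error:.1f}, 16 frames merged: {merged_error:.1f}")
```

With random noise like this, averaging reduces the error by roughly the square root of the number of frames. Real pipelines also have to align the frames and decide which parts of each one to keep, which is where the AI comes in.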

Some phones do this more aggressively, others less so. But the fact is, almost all of your photos taken with smartphones in the last few years have some kind of modification done by AI. You’re not actually capturing what you’re seeing with your eyes, but what your smartphone thinks will look best in a digital photo.

Pictures of the moon taken with the Galaxy S23 Ultra

Capturing photos of the moon is quite challenging, since it’s a very distant, very bright subject against a dark sky. Focusing on it is not easy, and you still need to set the right exposure to get all the details. It’s even more challenging for a smartphone camera.

You can take pictures of the moon with your iPhone if you use a camera app with manual controls, but they still won’t look good because of the distance and the lack of a longer optical zoom.

One of the key features of the Samsung Galaxy S Ultra phones is the periscope camera, which enables up to 10x optical zoom. Combined with software tricks, it lets users take pictures at up to 100x zoom. The iPhone is rumored to gain a periscope lens in the next generation, but for now, it only offers a telephoto lens with 3x optical zoom (if you have a Pro model).

With such a powerful zoom, Samsung promotes the camera of the Galaxy S23 Ultra, its latest flagship device, as capable of taking pictures of the moon. And it indeed is.

Last week, a user on Reddit conducted an experiment to find out whether or not the moon photos taken with the S23 Ultra are real. The user basically downloaded a picture of the moon from the internet, reduced its resolution to remove details, and pointed the phone at the monitor showing the blurry picture of the moon. Surprisingly, the phone took a high-quality picture of the moon.
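The logic of that test is easier to see with a toy example. The sketch below is my own illustration in Python, not the Reddit user's actual procedure: it shrinks a tiny image by averaging blocks of pixels and then blows it back up. The 8x8 checkerboard and the function names are assumptions chosen to keep the example small.

```python
def downscale(image, factor):
    """Shrink an image by averaging each factor x factor block.

    Assumes the width and height are multiples of factor.
    """
    out = []
    for y in range(0, len(image), factor):
        row = []
        for x in range(0, len(image[0]), factor):
            block = [
                image[yy][xx]
                for yy in range(y, y + factor)
                for xx in range(x, x + factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def upscale(image, factor):
    """Enlarge an image by repeating each pixel (nearest neighbor)."""
    return [
        [value for value in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


def contrast(image):
    """Difference between the brightest and darkest pixel."""
    flat = [value for row in image for value in row]
    return max(flat) - min(flat)


# A hypothetical 8x8 "photo" whose only content is fine detail.
original = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)] for y in range(8)]
restored = upscale(downscale(original, 2), 2)

print(contrast(original), contrast(restored))
```

Once the fine pattern has been averaged away, enlarging the image does not bring it back. So if a phone pointed at a blurred picture produces crisp detail, that detail has to come from somewhere other than the source image.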

The internet was immediately flooded with people and news sites claiming that Samsung’s phones take fake pictures of the moon. Was Samsung lying this whole time? Not really.

Here’s how Samsung’s phones take pictures of the moon

Contrary to what some people think, Samsung is not replacing the photos taken by users with random, high-quality moon shots. Huawei’s phones, for example, actually do this: instead of using AI, Huawei’s system uses pre-existing images of the moon to make the final photo. Samsung, on the other hand, is using a lot of AI to deliver good moon shots.

Samsung has an article on its Korean website detailing how the camera algorithms work in its smartphones. According to the company, all phones since Galaxy S10 use AI to enhance photos.

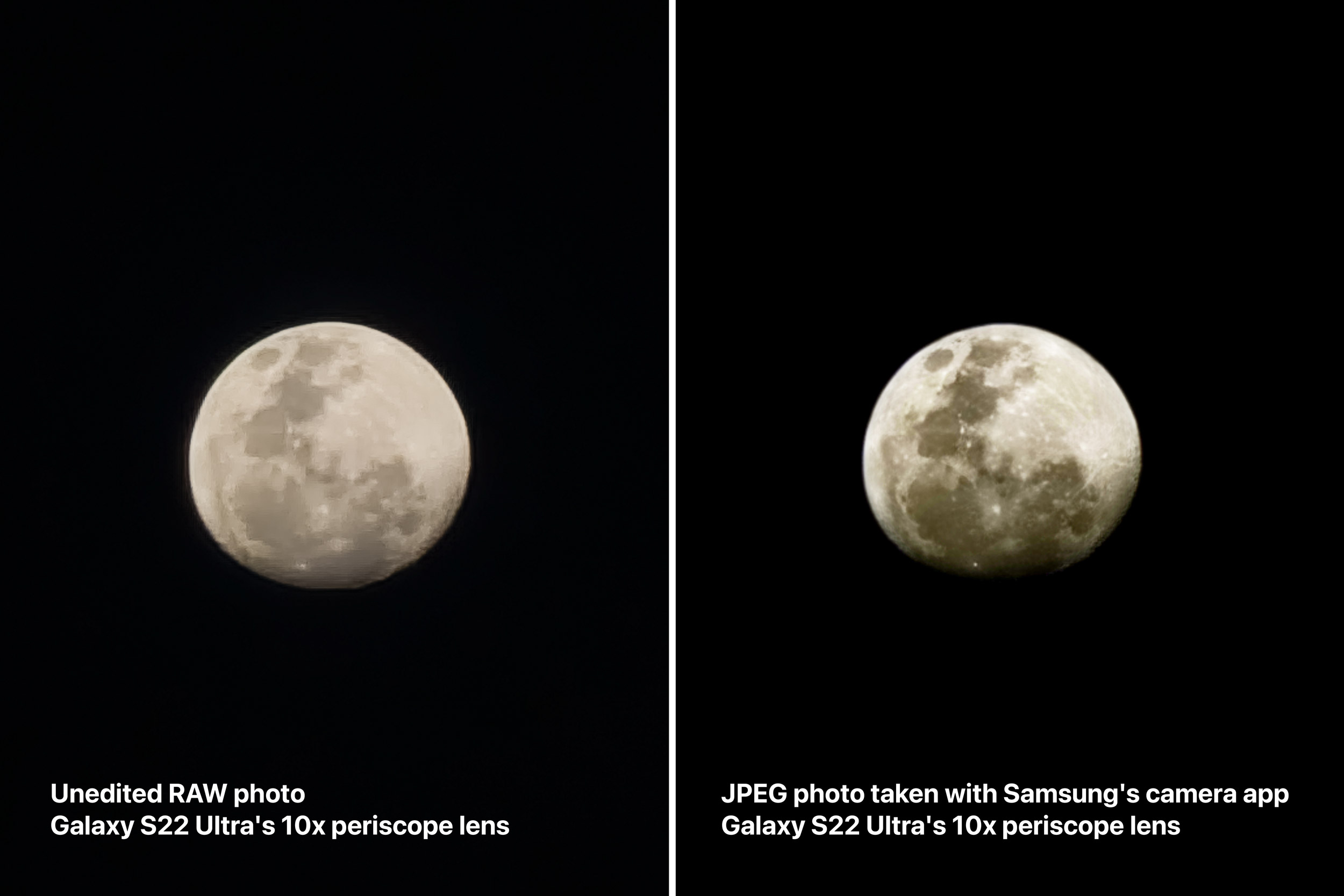

For phones with a periscope lens, Samsung uses a “Super Resolution” feature that synthesizes details that were not captured by the sensor. When Samsung’s phones detect the moon, they immediately use AI to increase the contrast and sharpness of the photo and to artificially increase the resolution of the details in it.
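For a sense of what “increasing contrast and sharpness” means in practice, here is a small Python sketch of two classic, non-AI operations: a contrast stretch and an unsharp mask, applied to a single row of pixels. This is not Samsung’s model. The function names and the sample row are hypothetical, and a learned super-resolution step goes further than these filters by generating detail rather than only exaggerating what is already there.

```python
def stretch_contrast(pixels, lo=0.0, hi=255.0):
    """Rescale the pixels so the darkest maps to lo and the brightest to hi."""
    darkest, brightest = min(pixels), max(pixels)
    if brightest == darkest:
        return [float(p) for p in pixels]
    scale = (hi - lo) / (brightest - darkest)
    return [lo + (p - darkest) * scale for p in pixels]


def box_blur(pixels):
    """Average each pixel with its two neighbors (edges are repeated)."""
    last = len(pixels) - 1
    return [
        (pixels[max(i - 1, 0)] + pixels[i] + pixels[min(i + 1, last)]) / 3
        for i in range(len(pixels))
    ]


def unsharp_mask(pixels, amount=1.0):
    """Sharpen by adding back the difference between the image and a blurred copy."""
    blurred = box_blur(pixels)
    return [
        min(255.0, max(0.0, p + amount * (p - b)))
        for p, b in zip(pixels, blurred)
    ]


# A hypothetical soft edge, like the rim of a crater in a hazy shot.
row = [100, 100, 110, 140, 150, 150]
stretched = stretch_contrast(row)
sharpened = unsharp_mask(stretched)

print([round(v) for v in stretched])
print([round(v) for v in sharpened])
```

Both steps make the existing edge steeper, but neither one can invent a crater that the sensor never recorded.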

This feature works pretty much the same way as Pixelmator’s ML Super Resolution and Halide’s Neural Telephoto. You’re not exactly replacing your photo with another one, but rather using a bunch of algorithms to reconstruct it at a higher quality.

I did the test myself with a Galaxy S22 Ultra, which also has a periscope lens. I used a third-party camera app to take a RAW picture of the moon at 10x zoom. Then I took a photo from the same position using Samsung’s camera app. If I overlay both images, I can clearly see that they show the same moon. But the processed version has a lot more detail that was added by AI to make the photo prettier.
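I compared the two shots by eye, but the same comparison could be made with numbers. The Python sketch below is one possible way to do it, under heavy assumptions: the two rows of pixel values are made up for illustration (they are not taken from my photos), and `correlation` and `detail_energy` are hypothetical helper names.

```python
def correlation(a, b):
    """Pearson correlation: close to 1.0 when two signals have the same shape."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    spread_a = sum((x - mean_a) ** 2 for x in a) ** 0.5
    spread_b = sum((y - mean_b) ** 2 for y in b) ** 0.5
    return covariance / (spread_a * spread_b)


def detail_energy(pixels):
    """Sum of brightness jumps between neighbors, a crude measure of fine detail."""
    return sum(abs(q - p) for p, q in zip(pixels, pixels[1:]))


# Made-up brightness values along one line across the moon's disc.
raw = [10, 12, 30, 80, 120, 125, 118, 70, 28, 11]
processed = [5, 14, 22, 95, 110, 140, 105, 82, 18, 6]

print(f"correlation: {correlation(raw, processed):.2f}")
print(f"detail: raw {detail_energy(raw)}, processed {detail_energy(processed)}")
```

A high correlation would indicate that both shots share the same overall structure, while a higher detail score for the processed version would point to texture that is not present in the RAW file.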

What’s a real photo and a fake one?

I wrote this article after watching MKBHD’s latest video in which he questions, “what is a photo?” As mentioned before, all smartphones today – even the iPhone – use algorithms to enhance images. Sometimes this works very well, sometimes not.

Top comment by Jeremy Somerville

The author here misunderstands how AI ML models work — which is understandable.

The TLDR is:

- AI models can be trained to do a lot of different things

- The Reddit post demonstrates what the phone is doing

- The AI model is effectively superimposing many different moon textures on top (from its statistical training data)

What’s the difference between this and other AI super resolution models? Not much, except the degree to which this has been taken. If the phone takes a photo of a blurry mess, and it comes out sharp, then it is not sharpening the photo, it is adding data. In this case from an ML model designed specifically to blow moon photos up like 50x. It’s getting that information from its training data (other high resolution moon photos)

I recently wrote about how Smart HDR has been making my photos look too sharp and with exaggerated colors, which I don’t like. Since iPhone XS, a lot of users have also complained about how Apple has been smoothing people’s faces in photos. Are the photos taken with my iPhone fake? I don’t think so. But they certainly don’t look 100% natural either.

Samsung has done something really impressive by combining its software with the periscope lens. And if the company is not replacing users’ images with alternative ones, I can’t see why this is a bad thing. Most users just want to take good pictures, no matter what.

I’m sure that if Apple eventually introduces a feature that uses AI to make moon shots better, a lot of people will love it without question. And as MKBHD said, if we start questioning the AI used to enhance a photo of the moon, then we’ll have to question every photo enhancement done by AI.

Read also:

- MKBHD claims that post-processing is ruining iPhone photos – and I agree with that

- Samsung’s moon photos aren’t completely fake, but this test shows how aggressive the AI is
