Scientific tests of Vision Pro latency show that Apple’s spatial computer lives up to the company’s claims of superiority over rival headsets – but only on the first of two metrics.

This means that the passthrough feature, which lets you see the real world around you, is better than that of any rival in one way, but is very slightly (and imperceptibly) worse than that of Meta’s headsets in another …

Passthrough, and the two types of latency

Vision Pro, like other VR headsets, completely cuts you off from the real world. The workaround for this is passthrough. Multiple cameras on the outside of the device feed video to on-board processing units, which combine the feeds into a single image.

That video-processing task takes a non-zero length of time, which means you’re not quite looking at the world in real-time. Instead, there’s a small lag between something happening and you seeing it, which is known as the see-through latency.

Similarly, when you turn your head to the left or right, there is a lag between the movement of your head and the display being updated to show the new viewpoint. That’s known as the angular latency.
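To give a rough sense of scale, the angle by which the displayed view trails your head is approximately your turning speed multiplied by the lag. The short sketch below works that out; the turning speed and the two latency values are illustrative assumptions, not figures from the tests, and the function name is hypothetical.

```python
def angular_offset_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Approximate angle (degrees) by which the display trails the head.

    Assumes a constant turning speed and no motion prediction.
    """
    return head_speed_deg_per_s * (latency_ms / 1000.0)


# Assumed, illustrative values only.
HEAD_SPEED_DEG_PER_S = 100.0

for latency_ms in (10.0, 40.0):
    offset = angular_offset_deg(HEAD_SPEED_DEG_PER_S, latency_ms)
    print(f"{latency_ms:.0f} ms of lag at {HEAD_SPEED_DEG_PER_S:.0f} deg/s "
          f"is about {offset:.1f} degrees behind")
```

The point is simply that the faster you turn, the more visible a given lag becomes.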

Vision Pro latency: See-through

Apple says that Vision Pro latency is 12ms, by which it means the see-through latency (sometimes referred to as photon-to-photon: the time between a photon in the real world hitting a camera and a photon from the display hitting your eyeballs).

Optofidelity, a company specialising in this type of benchmarking, put that claim to the test. It actually found that the latency was marginally lower (better) than Apple claimed, at ~11ms.

This is much better than any of the three rivals tested. The HTC VIVE XR Elite, Meta Quest 3, and Meta Quest Pro all had see-through latency in the 35-40ms range.

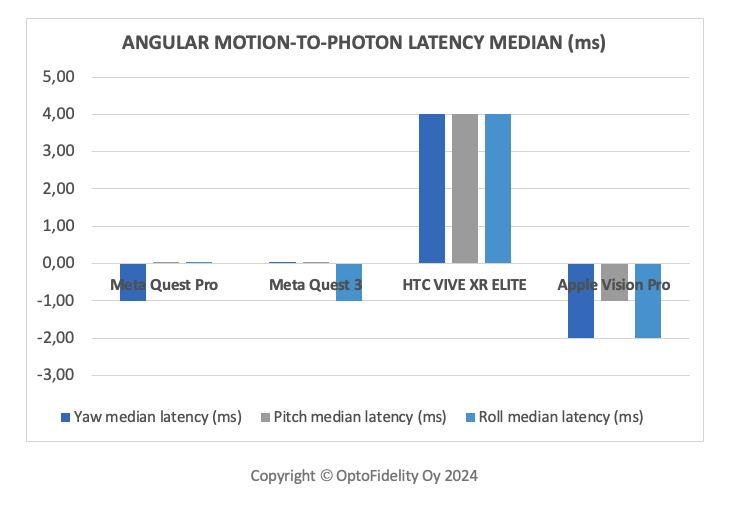

Vision Pro latency: Angular motion

Angular motion latency gets a little more interesting, and that’s because three of the four headsets tested use predictive algorithms to overcome the latency.

Obviously there’s no way for a headset to predict what is happening in front of it, so there’s no workaround for see-through latency. But when you turn your head, motion detectors know how quickly you are turning it, and can use that information to cheat.

Let’s say you turn your head to the left at a constant speed. The cameras on the left side of the headset see the new viewpoint before the centre of your view reaches it, so the headset can use that feed to shift the displayed image so that it shows what your eyes will see by the time you reach that angle.

This predictive approach means that angular latency can in theory be zero – if the predicted motion is 100% accurate. If you move your head faster than expected, then you again get lag. But if you move your head slower than expected, the image you see can actually get ahead of the real-time view.
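The behaviour described above can be illustrated with a minimal sketch of constant-speed prediction. This is a simplified model written for illustration, not how any of the tested headsets is known to work; the function name, the 30ms processing delay, and the turning speeds are all assumptions.

```python
def effective_latency_ms(measured_speed: float, actual_speed: float,
                         pipeline_ms: float) -> float:
    """Effective angular latency after constant-speed prediction.

    The headset measures the turning speed (deg/s) and draws the view it
    expects the head to reach once the processing delay has passed.
    Positive result: the display trails the head.
    Negative result: the display is ahead of the head.
    actual_speed must be non-zero.
    """
    dt = pipeline_ms / 1000.0
    predicted_angle = measured_speed * dt  # where the headset expects the head
    actual_angle = actual_speed * dt       # where the head really ends up
    # Convert the angular error back into time at the real turning speed.
    return (actual_angle - predicted_angle) / actual_speed * 1000.0


PIPELINE_MS = 30.0   # assumed processing delay
MEASURED = 100.0     # assumed turning speed seen by the motion sensors (deg/s)

for actual in (100.0, 120.0, 80.0):
    latency = effective_latency_ms(MEASURED, actual, PIPELINE_MS)
    print(f"head actually turns at {actual:.0f} deg/s: {latency:+.1f} ms")
```

With these assumed numbers, a perfect prediction gives zero, a head that speeds up gives a positive lag, and a head that slows down gives a negative value, meaning the image is ahead of the real-time view.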

For this reason, measurements of angular motion latency can be negative, and that’s what Optofidelity found here for three of the headsets.

HTC’s Vive seemingly doesn’t use prediction, so its latency remained unchanged. But all three of the others use the predictive approach, and all three actually get very slightly ahead of the real-world angle, resulting in negative latency values.

The optimal latency value is zero, and Vision Pro’s negative figure was slightly further from it than those of the Meta headsets, so it performed slightly worse. However, Optofidelity said that all three latency figures were too small to be detectable in real-life use, so effectively all three have no angular latency. The company also notes that, as this is a software function, Apple should be able to fine-tune its performance here.

Photo by Mylo Kaye on Unsplash
