The first developer beta of the first Apple Intelligence features launched this week, including a few early Siri improvements – together with an extremely pretty new interface. Although I’m in the UK, I was able to get access by switching my iPhone’s region to the US and its language to US English.

Since Apple will be calling all the new features a beta when they launch, what we are currently able to test is effectively the very first beta of a beta product – so expectations should be set accordingly …

What we will be getting at some point – perhaps by the end of the year – is a whole new Siri, with dramatically improved capabilities. But that’s a long way off yet.

What the first developer beta provides is just the very first step in making Siri smarter. Here’s what is officially included:

- A new (and exceedingly pretty!) user interface

- The freedom to switch between voice and text

- The ability to handle Apple support questions

- Coping with verbal stumbles

- Maintaining contextual understanding for follow-up queries

Taking each in turn …

The new UI – pretty, but confusing

First, I absolutely love the new UI. It’s honestly one of the most beautiful pieces of software design I’ve seen in a long time.

If you press and hold the side button, the animation even begins from the button position and spreads out from there. It’s gorgeous.

However … it’s also confusing. We’re seeing a brand new animation for what is, currently, mostly the old Siri. In effect, the UI change signals a big change but delivers only a small one. In my view, it would be far better for the new UI to wait for the full capabilities of the new Siri.

Switching between voice and text

I work from home, and I live in London, where nobody takes the slightest notice of what you’re saying to your phone in public. So, personally, I’m almost always happy to voice Siri requests.

All the same, I think it’s fantastic to give people the choice, and the double-tap gesture at the bottom of the screen makes switching to text really easy. This is a great piece of UI design.

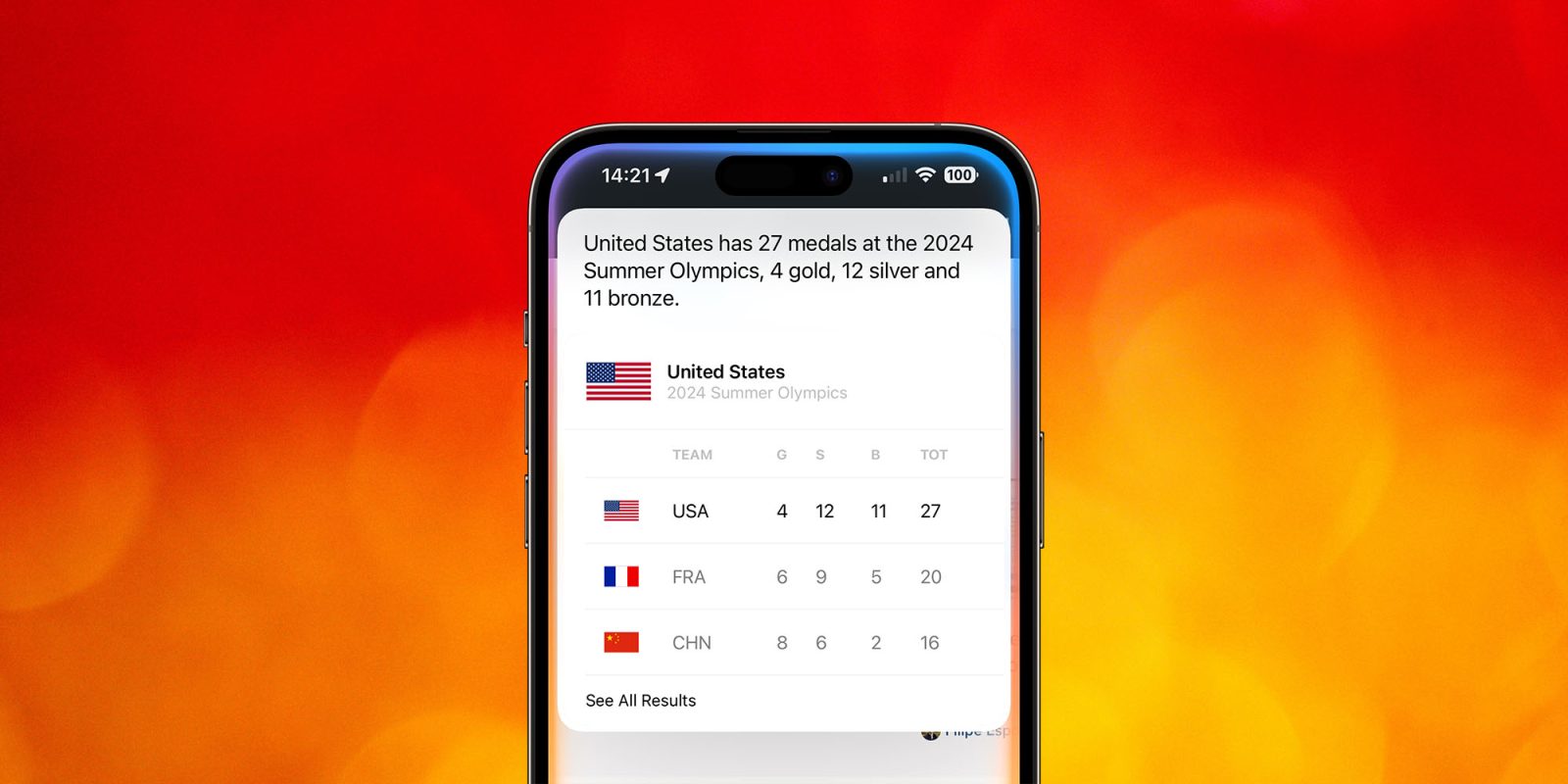

Handling Apple support questions

Right now, this is the single most impressive feature. I’ve tested it on a whole bunch of queries, and it gave great answers for all of them.

For example:

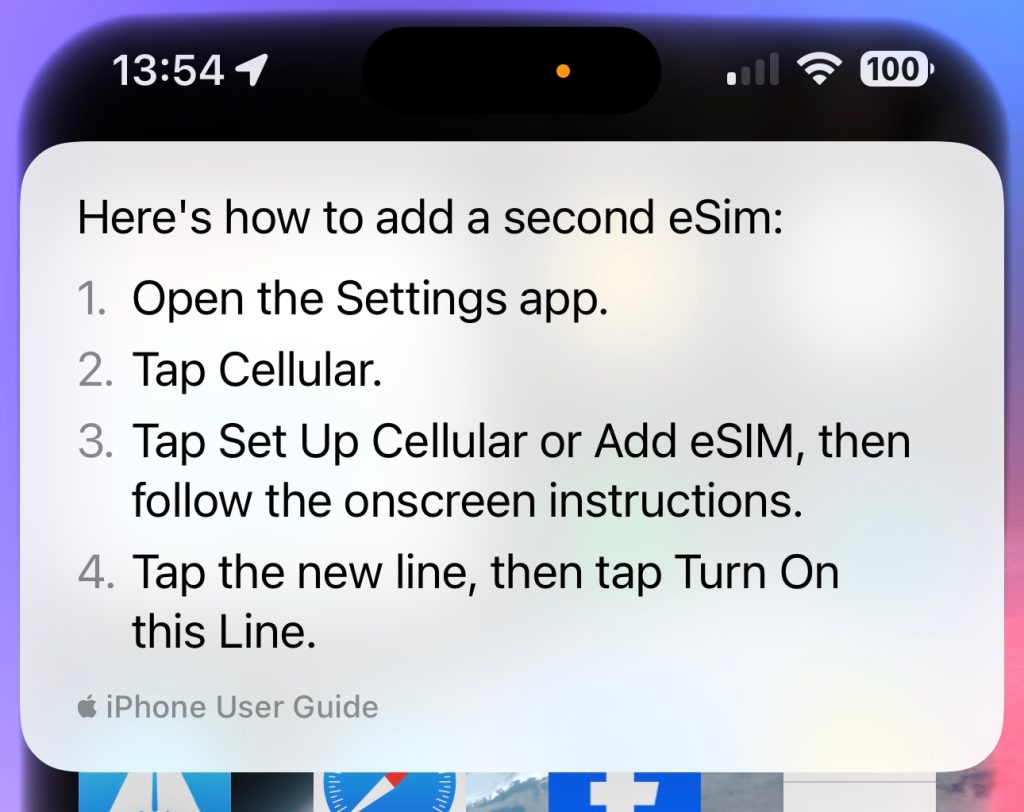

Siri, how do I add an eSIM to my iPhone?

Siri responded with a great summary, which would be perfectly adequate for anyone to follow.

But if more info is needed, simply tap anywhere in the answer and it opens up the appropriate support document.

Incidentally, this is another reason for Apple to stick to US English for now. Because I had to change my iPhone’s region to the US and its language to US English, the setting is labeled Cellular, but on a UK iPhone it would normally be Mobile Data. There are a lot of moving parts in making Apple Intelligence work worldwide.

Coping with verbal stumbles

This, too, is really impressive. I’ve tried changing my mind about what I’m asking in mid-query, and Siri handles it without blinking. For example:

What time is it in New York – no, Chicago – no, I mean San Francisco?

Siri merely metaphorically rolled her eyes and told me the time in San Francisco.

Maintaining contextual understanding

This, sadly, is where things fall down – and that’s mostly because Siri isn’t fundamentally any smarter, so all the “Here’s what I found on the web” responses still happen. For example:

Me: What is Apple Intelligence?

Siri: You can find details on all Apple products at apple.com

Sometimes it does well. For example, this interaction:

Me: What time is it in Mumbai?

Siri: It’s 18:37 in Mumbai, India

Me: And what’s the weather there?

Siri: It’s currently raining and 28°C in Mumbai, India

(I should note that after setting the US as my region, I manually switched the time format back to 24-hour and the temperature unit back to Celsius.)

However, context only applies to the current Siri session – that is, as long as the animation is on-screen. Once that times out, after about six seconds, Siri forgets the context. So when I waited 10 seconds and then asked “And what’s the weather there?”, it returned my local weather, despite the verbal cues that I was still referring to Mumbai.

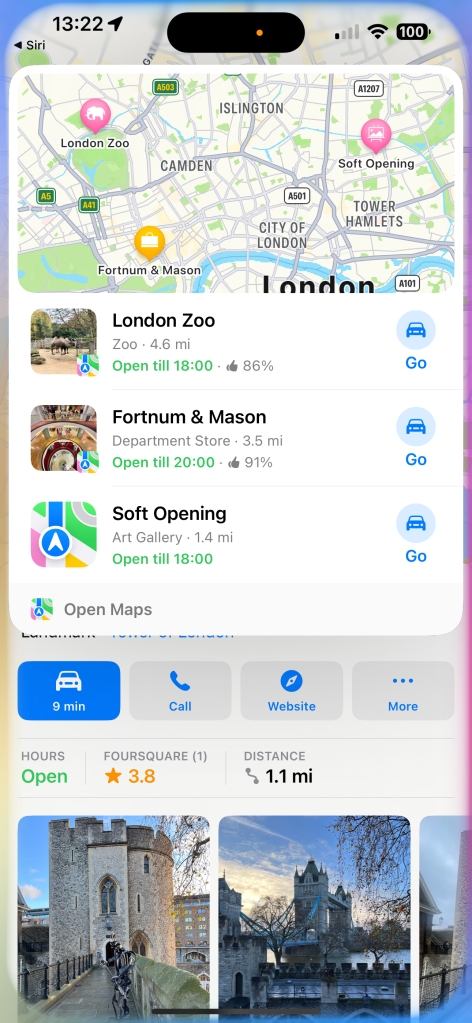

For most queries, however, Siri is no smarter. For example, this interaction:

Me: How long will it take me to get to the Tower of London?

Siri: Which one? <Shows list of places with Tower in the name>

Me: <Taps Tower of London>

Siri: <Shows it in Maps>

Me: And what are the opening hours?

Siri:

So yeah, don’t expect too much!

But, like I say, this is the very first beta version of a beta product, so all this is to be expected. What will be interesting to see is the pace of improvements, and exactly how long we’ll have to wait for all the new Siri capabilities to land.

Image: 9to5Mac collage using background by Alexander Grey on Unsplash

