The model, called SHARP, can reconstruct a photorealistic 3D scene from a single image in under a second. Here are some examples.

SHARP is just awesome

Apple has published a study titled “Sharp Monocular View Synthesis in Less Than a Second,” in which it details how it trained a model to reconstruct a 3D scene from a single 2D image, while keeping distances and scale consistent in real-world terms.

Here’s how Apple’s researchers present the study:

“We present SHARP, an approach to photorealistic view synthesis from a single image. Given a single photograph, SHARP regresses the parameters of a 3D Gaussian representation of the depicted scene. This is done in less than a second on a standard GPU via a single feedforward pass through a neural network. The 3D Gaussian representation produced by SHARP can then be rendered in real time, yielding high-resolution photorealistic images for nearby views. The representation is metric, with absolute scale, supporting metric camera movements. Experimental results demonstrate that SHARP delivers robust zero-shot generalization across datasets. It sets a new state of the art on multiple datasets, reducing LPIPS by 25–34% and DISTS by 21–43% versus the best prior model, while lowering the synthesis time by three orders of magnitude.”
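For readers wondering how figures like “reducing LPIPS by 25–34%” and “three orders of magnitude” are typically computed, here is a minimal Python sketch. LPIPS and DISTS are perceptual error metrics where lower is better, so a reduction is measured relative to the previous best score. The function names and the sample numbers below are made up for illustration and are not taken from Apple's paper.

```python
import math


def relative_reduction(baseline: float, new: float) -> float:
    """Fraction by which `new` improves on `baseline` for a lower-is-better metric."""
    return (baseline - new) / baseline


def orders_of_magnitude(baseline_seconds: float, new_seconds: float) -> float:
    """Speedup expressed as a power of ten (3.0 means 1,000 times faster)."""
    return math.log10(baseline_seconds / new_seconds)


# Hypothetical scores, chosen only to show the arithmetic.
lpips_drop = relative_reduction(baseline=0.40, new=0.30)
speedup = orders_of_magnitude(baseline_seconds=1000.0, new_seconds=1.0)
print(f"LPIPS reduced by {lpips_drop:.0%}, speedup of 10^{speedup:.0f}")
```

In other words, a method that took roughly 1,000 seconds per scene would need to drop to about one second to earn the “three orders of magnitude” label.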

In a nutshell, the model predicts a 3D representation of the scene, which can be rendered from nearby viewpoints.

A 3D Gaussian is basically a small, fuzzy blob of color and light, positioned in space. When millions of these blobs are combined, they can recreate a 3D scene that looks accurate from a given vantage point.
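To make the “fuzzy blob” idea more concrete, here is a simplified Python sketch of what a single Gaussian might store and how several of them could be blended into one pixel. It is an illustration only, not SHARP's code: the `Gaussian3D` class and `composite` function are hypothetical names, the blob is axis-aligned, and real Gaussian splatting renderers use full covariance matrices, view-dependent color, and projection onto the image plane.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Gaussian3D:
    mean: Vec3      # center of the blob in space
    scale: Vec3     # spread along x, y, z (axis-aligned for simplicity)
    color: Vec3     # RGB, each channel in [0, 1]
    opacity: float  # peak opacity at the center, in [0, 1]

    def alpha_at(self, point: Vec3) -> float:
        """Opacity at `point`: peak opacity times the Gaussian falloff."""
        d2 = sum(((p - m) / s) ** 2
                 for p, m, s in zip(point, self.mean, self.scale))
        return self.opacity * math.exp(-0.5 * d2)


def composite(samples: Iterable[Tuple[Vec3, float]]) -> Vec3:
    """Front-to-back alpha blending of (color, alpha) pairs, nearest first."""
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer blobs
    for color, alpha in samples:
        weight = transmittance * alpha
        for i in range(3):
            pixel[i] += weight * color[i]
        transmittance *= 1.0 - alpha
    return (pixel[0], pixel[1], pixel[2])


# A half-transparent red blob in front of a fully opaque blue one.
red = Gaussian3D(mean=(0.0, 0.0, 1.0), scale=(0.1, 0.1, 0.1),
                 color=(1.0, 0.0, 0.0), opacity=0.5)
blue = Gaussian3D(mean=(0.0, 0.0, 2.0), scale=(0.1, 0.1, 0.1),
                  color=(0.0, 0.0, 1.0), opacity=1.0)
pixel = composite([(g.color, g.alpha_at(g.mean)) for g in (red, blue)])
print(pixel)
```

The nearer blob contributes first and dims everything behind it, which is why the order of the samples matters.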

To create this kind of 3D representation, most Gaussian splatting approaches require dozens or even hundreds of images of the same scene, captured from different viewpoints. Apple’s SHARP model, by contrast, is able to predict a full 3D Gaussian scene representation from a single photo in one forward pass of a neural network.

To achieve this, Apple trained SHARP on large amounts of synthetic and real-world data, enabling it to learn common patterns of depth and geometry across multiple scenes.

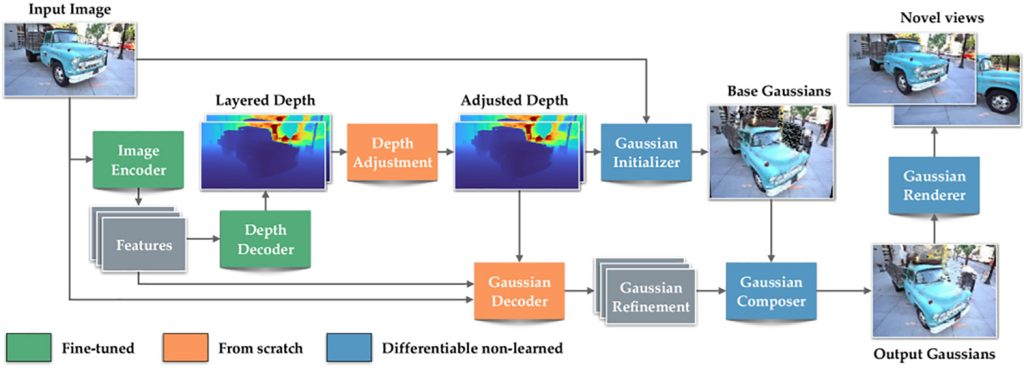

As a result, when given a new photo, the model estimates depth, refines it using what it has learned, and then predicts the position and appearance of millions of 3D Gaussians in a single pass.
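One way to picture the step from estimated depth to 3D positions is the standard pinhole camera model, which lifts each pixel into space using its depth. The Python sketch below shows that generic geometry only. It is not SHARP's network or Apple's implementation, and the function names and camera parameters (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen for the example.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def unproject(u: float, v: float, depth: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Lift pixel (u, v) with metric depth into camera-space coordinates."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def depth_map_to_points(depth_map: Sequence[Sequence[float]],
                        fx: float, fy: float,
                        cx: float, cy: float) -> List[Point3]:
    """Turn a per-pixel depth map (rows of depths) into a list of 3D points."""
    return [unproject(u, v, d, fx, fy, cx, cy)
            for v, row in enumerate(depth_map)
            for u, d in enumerate(row)]


# A tiny 2x2 depth map in meters, with made-up camera intrinsics.
points = depth_map_to_points([[2.0, 2.0], [4.0, 4.0]],
                             fx=2.0, fy=2.0, cx=0.5, cy=0.5)
for p in points:
    print(p)
```

Because the depth values are in real-world units, the resulting points are too, which is what makes a representation “metric.”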

This enables SHARP to reconstruct a plausible 3D scene without requiring multiple images or slow, per-scene optimization.

There is a tradeoff, however. SHARP accurately renders nearby viewpoints, but it doesn’t synthesize entirely unseen parts of the scene. This means that users can’t stray too far from the vantage point from which the picture was taken.

That’s how Apple keeps the model fast enough to generate the result in less than a second, as well as stable enough to create a more believable result. Here is a comparison between SHARP and Gen3C, which is one of the strongest previous methods:

Perhaps more interesting than taking Apple at its word is trying this for oneself. To that end, Apple has made SHARP available on GitHub, and users have been sharing the results of their own tests.

Here are a few posts that X users have shared over the last few days:

You may have noticed that the last post is actually a video. That goes beyond Apple’s original scope for SHARP, and shows other ways in which this model, or at least its underlying approach, could be extended in future work.

If you decide to give SHARP a try, share the results with us in the comments below.
