A busy time meant that my first test of the new 5x telephoto lens on the iPhone 15 Pro Max was nothing more than a quick 3x versus 5x comparison shot from my apartment as part of a first impressions piece.

Yesterday, I got the chance to see how useful the new lens is for portraits – from the purely optical bokeh seen above, to testing iOS 17’s newfound ability to automatically add depth data, letting you adjust focus after taking the photo …

All photos are unedited, straight-from-camera, except for occasional cropping and resizing for the web. Right-click and open image in new tab to view larger versions.

A 120mm focal length (in 35mm-equivalent terms) is longer than would typically be used for portraiture, though I have used a 90mm lens fairly extensively for headshots in the past, and wedding photographers frequently keep a 70-200mm lens on one body, often using the longer end for candid portraits.

All other things being equal, the longer the lens, the shallower the depth of field. Of course, on an iPhone that is complicated by the computational photography used to create artificial background blur, but I was able to test both optical and digital bokeh.
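To put rough numbers on the "longer lens, shallower depth of field" point, here is a minimal Python sketch using the standard thin-lens depth-of-field approximation for a full-frame camera. The 3 m subject distance, the f/2.8 aperture and the 0.03 mm circle of confusion are illustrative assumptions. The sketch models a conventional full-frame lens, not the iPhone's small sensor or any of its computational processing.

```python
import math


def depth_of_field(focal_mm: float, f_number: float, subject_mm: float,
                   coc_mm: float = 0.03) -> tuple[float, float]:
    """Return (near, far) limits of acceptable sharpness in mm.

    Thin-lens approximation; coc_mm is the assumed circle of confusion
    (0.03 mm is a common full-frame convention).
    """
    # Hyperfocal distance for this focal length, aperture and circle of confusion.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        # Focused at or beyond the hyperfocal distance: sharp to infinity.
        far = math.inf
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


if __name__ == "__main__":
    subject = 3000.0  # assumed subject distance: 3 m
    for focal in (24.0, 120.0):
        near, far = depth_of_field(focal, 2.8, subject)
        print(f"{focal:.0f}mm at f/2.8: sharp from {near / 1000:.2f} m to "
              f"{far / 1000:.2f} m (total {(far - near) / 1000:.2f} m)")
```

With those assumptions, the 24mm lens keeps roughly 2.1 m to 5.3 m acceptably sharp (about 3.2 m of depth). The 120mm lens narrows that to roughly 2.95 m to 3.05 m (about 0.1 m), at the same distance and aperture.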

Note: We can’t actually be certain that Apple doesn’t use digital trickery for background blur, even with depth data switched off, but the effects do at least appear natural.

Optical bokeh

With that disclaimer, let’s start by seeing how the purely optical bokeh looks. As with the shorter lenses on other iPhones, this depends to a significant degree on how close you get to the subject. For headshots, it may not come close to a real 120mm lens on a full-frame 35mm camera, but I’d still say it works well.

First, a head-and-shoulders shot. Here, the Tower of London is on the far side of a wide road, around 400-500 feet away:

As we come in a little closer for a tighter headshot, the background blur increases (photo taken in the same place):

While this doesn’t rely on digital trickery for the background blur, the iPhone does still apply its rather high default amount of sharpening – for my tastes, somewhat over-sharpened.

What impressed me here was that it was an overcast day with the sun to the right of the camera, and without any editing it still has enough skin detail in the shadow areas.

Here are a couple more examples of just natural bokeh.

Again, the light here is to the right of, and behind, my two kind volunteers, yet the iPhone had no problem capturing skin detail without needing to boost shadows. The Just Works level of iPhone photography these days really is impressive.

Automatic depth data

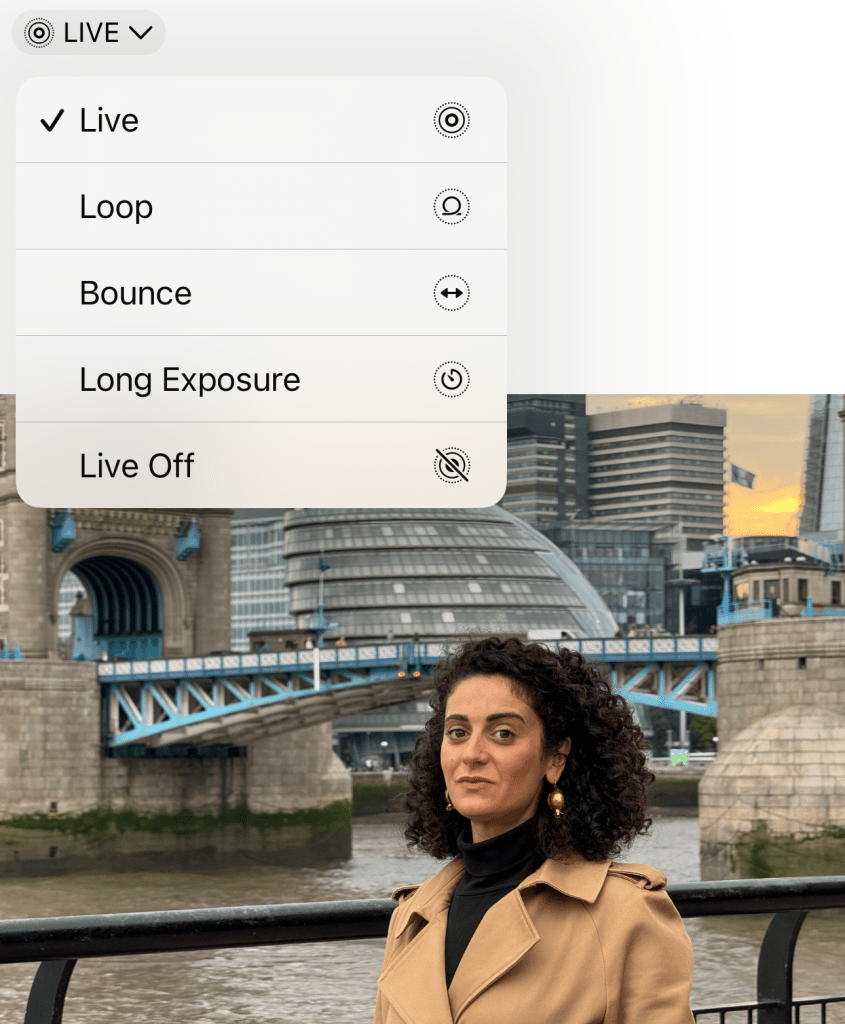

In iOS 17, the iPhone is supposed to detect human, dog, and cat faces in a photo, and automatically capture depth information that can be used to selectively blur the background when you edit the photo. As of the latest public release (iOS 17.0.2), this feature is very hit-and-miss!

Take this photo, for example. There’s a super-obvious face in the shot, yet checking reveals no depth data (when depth data is present, this menu offers the option to turn Portrait Mode on or off).

Yet this one, where the face is a much smaller part of the frame, no problem – depth data captured, and Portrait Mode available (and used to create artificial blur)!

This is a shot where additional background blur is really needed, but the iPhone says no:

Same here:

Come in tighter, however, and the phone is happy:

Yet here, where we are fairly tight, the faces should be really easy to detect, and we really need the feature … nope and nope:

I’m sure Apple is still working on this, and that it will improve in subsequent iOS updates.

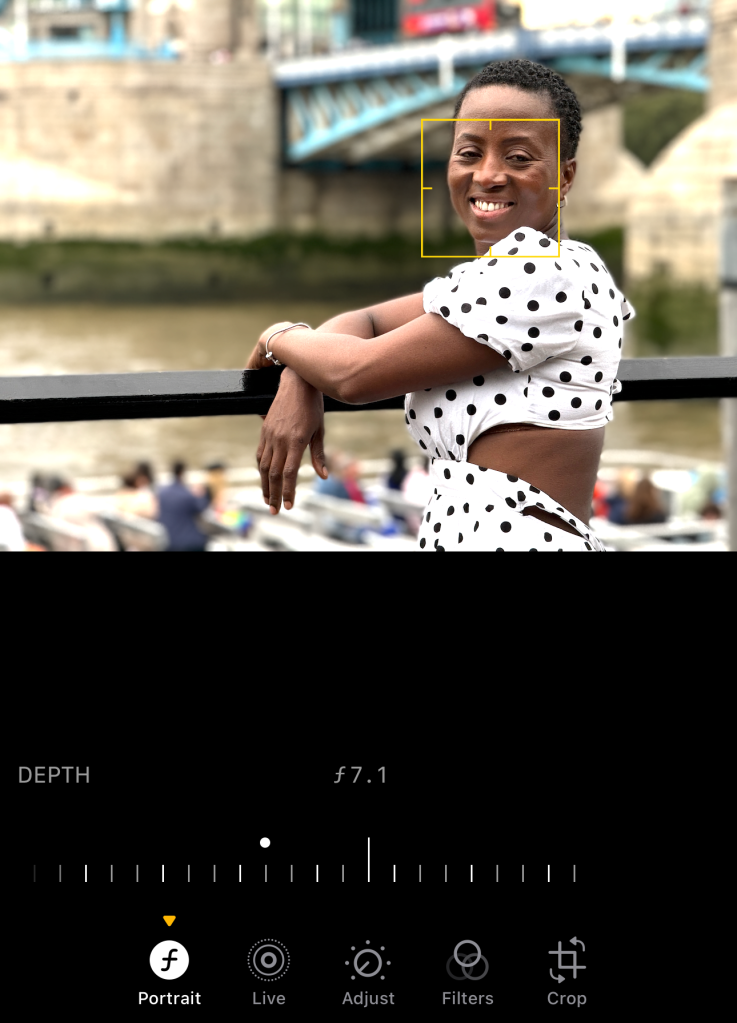

Changing the depth of field afterward

But when it works, the ability to change the apparent depth of field after taking the shot is a great feature. Check out just how different the same shot looks when varying the effective aperture.

First, f/8 (remember, this is simulating a 120mm lens, so there’s still a decent amount of background blur even at an aperture that would typically produce less):

Now f/4.5:

And finally, f/1.4 (which would be one very expensive 120mm lens!):
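Part of the reason such a lens would be expensive is simple arithmetic. The aperture (entrance pupil) diameter equals the focal length divided by the f-number. Here is a quick Python sketch, assuming a true 120mm full-frame lens rather than anything about the iPhone's actual optics:

```python
def entrance_pupil_mm(focal_mm: float, f_number: float) -> float:
    """Aperture (entrance pupil) diameter implied by focal length and f-number: D = f / N."""
    return focal_mm / f_number


# The three simulated apertures used in the example above, on an assumed 120mm lens.
for n in (8.0, 4.5, 1.4):
    print(f"120mm at f/{n:g}: entrance pupil ≈ {entrance_pupil_mm(120.0, n):.1f} mm")
```

That works out to about 15 mm at f/8, 26.7 mm at f/4.5 and 85.7 mm at f/1.4. An f/1.4 lens at this focal length therefore needs an opening roughly 86 mm across.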

Now, go pixel-peeping, and you can still see some tiny amount of blooming around the edges here and there, and if you look beneath Gloria’s armpit you can see that, oops, the iPhone still misses parts of the background. So no, Portrait Mode still isn’t perfect, but for the most part it’s now incredibly impressive.

Another example, this time starting at f/11:

Now f/3.5:

Finally, f/1.4:

One other weakness of Portrait Mode that persists is that, while the iPhone often achieves beautifully natural-looking focus fall-off behind the subject, it seems to ignore cases where the foreground should also have some blur.

For example, the wall in the background falls off very realistically:

Not so the foreground. With this shallow a DoF, we should see some degree of blurring to the fingers and end cap, but here the foreground is as sharp as the face:

Live Photo or Portrait, not both

One limitation that remains is that you can have either a Live Photo, which lets you choose a different frame within the capture period, or depth data, but not both.

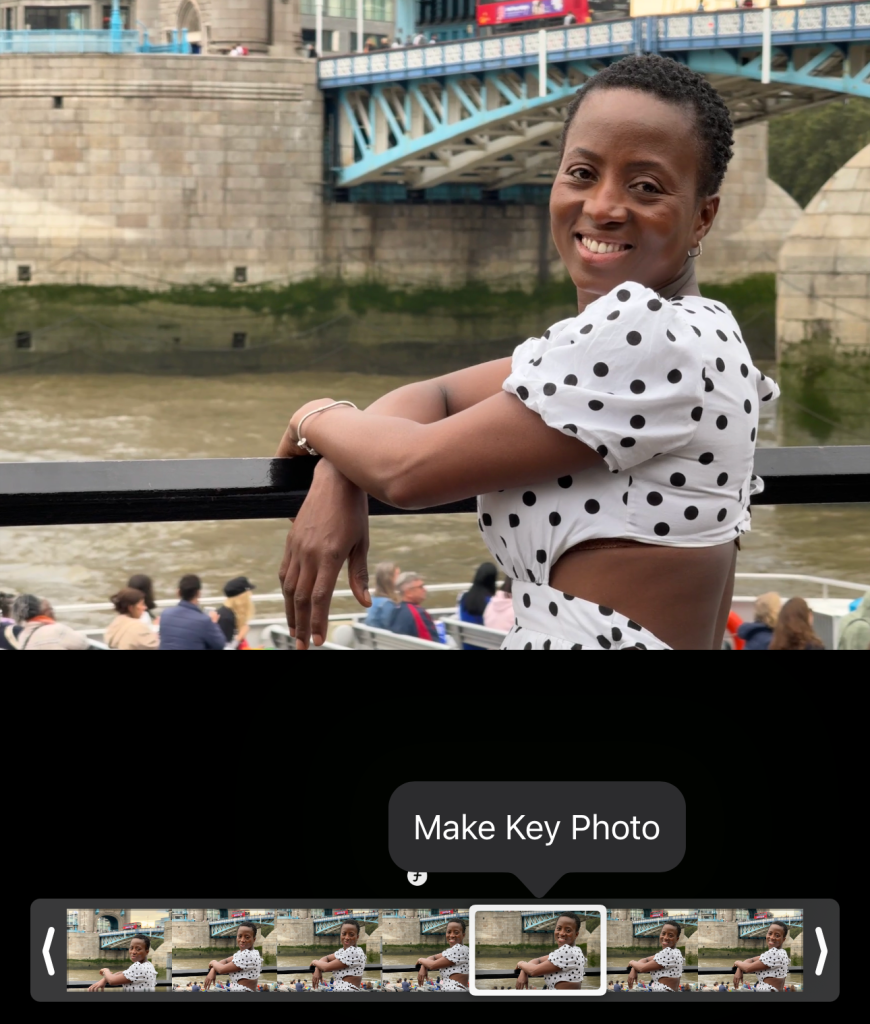

In this shot, my timing was off for the facial expression, but Live Photo can fix that:

However, if I also want to blur the background, then it ignores my key photo choice and reverts to the default frame:

Hopefully that, too, is something that can be fixed in a later update.

Noise

The other persistent issue with iPhones is that the ultrawide and telephoto cameras don’t get sensors as large as the main camera’s, so their images are noisier, especially in low light. View any of the above photos at a larger size, and you can see that.

For me, it’s not a dealbreaker; the noise is actually somewhat film-like. But it does mean that there is a noticeable difference in quality between the main camera and the others, and I’d love to see Apple show the other cameras the same sensor love as the main one.

Action Mode video

Finally, while my goal last night was shooting stills – with a video test to follow – there was one video feature I couldn’t wait to test. Pro photographer and videographer Tyler Stalman highlighted a very cool use of Action Mode: using it as the ultimate digital Steadicam for filming something while walking around it.

While he used it for a statue, it immediately struck me that it could be a very cool way to shoot a person, so I put it to the test.

Now, I’m shooting really tight, handheld, no gimbal, using the equivalent of a 120mm lens, and walking around her, on bumpy grass, without any attempt at a ninja walk. Oh, and this is uploaded straight from camera – no editing software, no stabilization in post.

I have to say that the result simply blew me away! This is a super-cool effect, and I could definitely see using this in a short film. Indeed, I have one idea already …

Conclusions


The 5x telephoto lens on the iPhone 15 Pro Max is very tight for portraiture, and I’m not sure I’d use it very often. The noise levels mean I would definitely be wary of using it indoors (though I will test that).

However, it’s a great option to have. The 48MP sensor means that you can use the 2x digital zoom without losing quality over its predecessor, and there are times when a 5x zoom will get you a shot that wouldn’t otherwise be possible.

I’ll also be testing it for cityscapes, which I think is a more realistic use for me – watch this space.

What are your impressions of the iPhone 15 Pro Max 5x telephoto lens? Please share your thoughts in the comments, and links to sample photos would be great!
