I have been using Apple’s new Visual Intelligence feature since the iOS 18.2 Developer Beta was released a few weeks back. At first, I thought it would be one of those features that is cool to show off to friends but that I would never actually use. But as the days and weeks went by, I found myself using it again and again in all kinds of situations. Here are some of the best ways to use Visual Intelligence, and I will even show you how to use it on devices that don’t have Camera Control! Let’s get into it.

Be sure to check out our video below to see all of these use cases in action. In it, I also show five additional use cases that I think will resonate with you.

Lastly, a quick reminder: as of this writing, this feature is still in developer and public beta. If you are on iOS 18.1, it will not be available yet. iOS 18.2 should be released to the public in mid-December.

How to enable Visual Intelligence

Weirdly enough, there are no dedicated settings for this feature. You get a prompt when you first update to iOS 18.2 showing you how to enable it, but that’s it. Technically, this only works on iPhone 16 models, because you need the Camera Control button to launch it. All you need to do is long-press the button, and you are brought into Visual Intelligence.

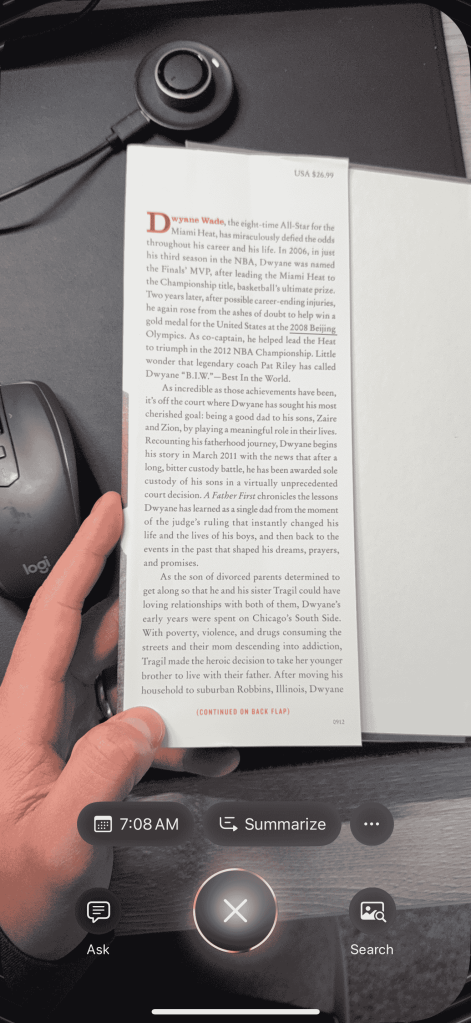

1. Summarizing & reading books aloud

One of the best use cases I have found is that you can use Visual Intelligence to read something aloud for you, no matter the text. I found this extremely useful for books. This could also somewhat replace audiobooks in certain situations. It is very simple to use:

- Open up Visual Intelligence

- Aim your phone at the text you want summarized or read to you

- Your iPhone will recognize that it is text and ask if you want it summarized or read aloud

- Select an option

- Sit back and relax

As someone who needs both the audiobook and the physical book to follow along, I find this amazing. I can just snap a picture of one page and have Siri read it aloud to me. Give this a try with any text, from contracts to instructions to books. It’s extremely helpful.

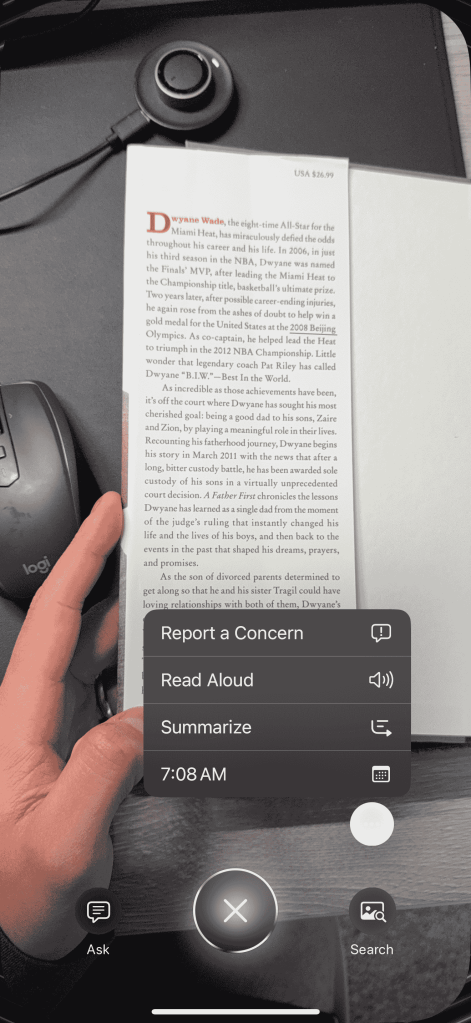

2. Reverse Google image search

One of the main functions of Visual Intelligence is search. This kind of feature has been around on other phones and in other apps for a while, but it’s great that it is now built into iOS. You can take a Visual Intelligence picture of anything, and it will give you two options: Ask and Search. For a reverse image search, just tap Search, and it will find similar things on Google. Here you can see I took an image of an iPhone, and it found iPhones near me that I could purchase. But this works with almost anything you point it at!

3. ChatGPT Ask feature

As I mentioned above, two main functions are always available when using Visual Intelligence: Ask and Search. We already covered Search, so what is Ask? This is where ChatGPT comes into play. If you snap a Visual Intelligence image and tap Ask, it will upload that image to ChatGPT and let it interpret what it sees. This works with just about anything, and it is surprisingly good at getting small details right. One thing I did notice is that it tends to avoid naming specific types of IP, like cartoon characters. It will still describe them and give you as much information as it can without saying the character’s name.

4. Realtime business information

With this feature, Apple is bringing an augmented reality experience to Visual Intelligence. You can now just pull up Visual Intelligence, point it at a business, and get all the information you need. You don’t even need to snap an image; just point the Visual Intelligence camera at the business, and it will start to work its magic. The example I used in my video was a local coffee shop. I pointed my phone at the coffee shop, and it was recognized immediately. The Apple Maps listing shows up, along with options like:

- Call

- Menu

- Order

- Hours

- Yelp rating

- Images

All of this is recognized automatically, so you don’t need to fumble through menus. Just point your phone, and everything is at your fingertips.

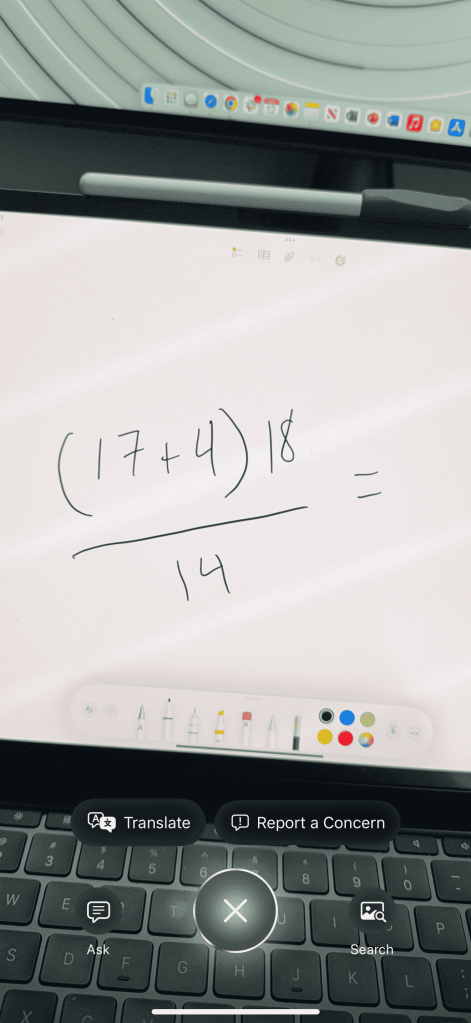

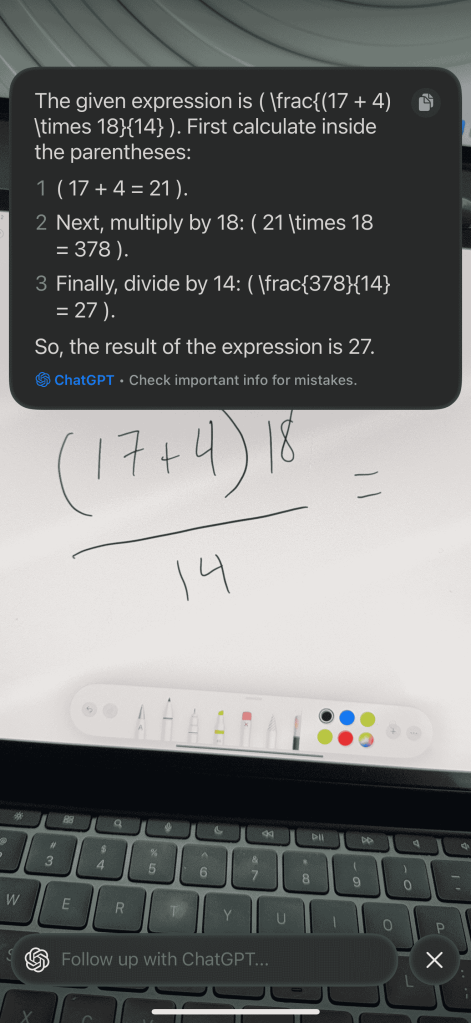

5. Problem solving

This is one of those features I wish I had back in 2012 when I was in calculus class. You can take a Visual Intelligence image of a math problem and then ask ChatGPT to solve it. You get a step-by-step walkthrough of how to solve the equation or problem. I remember spending so long on geometry proofs and always having to show my work. Now we can just take a picture and let Siri do the rest.

Final take

As I mentioned, all of these features are still in beta, so they should continue to improve over time. But it’s amazing that this is now built into the OS and we don’t need separate apps for these kinds of tasks. I have been using Visual Intelligence more and more as the days go on, in both my personal and professional life. The most exciting thing is that this is the worst it will ever be, and it will only get better!

Be sure to watch our video to see even more use cases and what it’s really like to use these new features. What do you think of Visual Intelligence? Is it something you would use? Have you installed the beta on your devices? Let’s discuss in the comments below!

FTC: We use income-earning auto affiliate links.
