When I tried Ray-Ban Meta glasses last week, I described the AI features as largely a gimmick at this point, but said they still left me excited for a future Apple Glasses product.

Several commenters noted that the glasses do somewhat better when given follow-up questions, so I tried this out on another walk, with a mix of hits and misses. But what’s really missing at present is integration …

The glasses have no access to the GPS of the connected iPhone, so can’t use location as a clue. They don’t have access to third-party apps, so can’t pull data from those for things like public transit. What we really need here is a fully connected ecosystem – and I can think of a company that specialises in that …

The long-term AI dream

I’ve written before about my long-term dream for a fully-connected Siri. Almost a decade ago, I dreamed of being able to give it an instruction like “Arrange lunch with Sam next week” and leave it to figure out all the details.

Siri knows who Sam is, so that bit’s fine. It has access to my calendar, so knows when I have free lunch slots. Next, it needs to know when Sam has free lunch slots.

This shouldn’t be complicated. Microsoft Outlook has for years offered delegated access to calendars, where work colleagues are allowed to check each other’s diaries at the free/busy level, and authorized people are allowed to add appointments. So what we need here is the iCloud equivalent.

My iPhone checks Sam’s iCloud calendar for free lunch slots and matches them with mine. It finds we’re both free on Wednesday so schedules the lunch.
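
To show how little logic the matching step needs, here is a minimal sketch in Python. It assumes each person's calendar can be reduced to a list of busy blocks, which is all that free/busy access exposes. The lunch window, the function names and the sample appointments are all hypothetical, and nothing here is a real iCloud or Siri API.

```python
from datetime import date, datetime, time, timedelta

# Hypothetical lunch window; the article does not define one.
LUNCH_START = time(12, 0)
LUNCH_END = time(14, 0)


def is_free_for_lunch(busy, day):
    """Return True if no busy block overlaps the lunch window on `day`."""
    start = datetime.combine(day, LUNCH_START)
    end = datetime.combine(day, LUNCH_END)
    # Two intervals overlap when each one starts before the other ends.
    return not any(b_start < end and start < b_end for b_start, b_end in busy)


def first_shared_lunch(my_busy, their_busy, week_start, days=5):
    """Return the first day on which both people are free at lunch, or None."""
    for offset in range(days):
        day = week_start + timedelta(days=offset)
        if is_free_for_lunch(my_busy, day) and is_free_for_lunch(their_busy, day):
            return day
    return None


# Illustrative data only: each entry is a (start, end) busy block.
monday = date(2024, 5, 20)
mine = [
    (datetime(2024, 5, 20, 12, 30), datetime(2024, 5, 20, 13, 30)),
    (datetime(2024, 5, 21, 11, 0), datetime(2024, 5, 21, 12, 15)),
]
sams = [
    (datetime(2024, 5, 20, 9, 0), datetime(2024, 5, 20, 10, 0)),
]

print(first_shared_lunch(mine, sams, monday))  # 2024-05-22, a Wednesday
```

Note that the function only ever sees busy blocks, never appointment details, which is the same privacy level as the free/busy sharing described above.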

And it could do even more. My iPhone could easily note my favourite eateries, and Sam’s, and find one we both like. It could then go online to the restaurant’s reservation system to make the booking.
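
Picking the restaurant is a similarly small problem. The sketch below assumes each phone holds a list of favourites ordered from most to least liked, and chooses the shared entry with the best combined rank. The function name and the restaurant names are made up for illustration, and the booking step is left out because it would depend on each restaurant's own reservation system.

```python
def pick_shared_restaurant(my_favourites, their_favourites):
    """Return the shared favourite with the best combined rank, or None.

    Both lists are assumed to be ordered from most to least liked.
    Ties on combined rank are broken alphabetically by name.
    """
    their_rank = {name: rank for rank, name in enumerate(their_favourites)}
    shared = [
        (my_rank + their_rank[name], name)
        for my_rank, name in enumerate(my_favourites)
        if name in their_rank
    ]
    return min(shared)[1] if shared else None


# Hypothetical favourites lists, purely for illustration.
mine = ["Noodle House", "Pizza Place", "Curry Corner"]
sams = ["Pizza Place", "Curry Corner", "Taco Stand"]

print(pick_shared_restaurant(mine, sams))  # Pizza Place
```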

OpenAI just gave us another look at the future of AI in the form of GPT-4o, a version of ChatGPT which can use our iPhone cameras to see what’s around us (as well as Screen Recorder to see what’s on our screen in other apps).

That ability to see around us is, of course, much more conveniently done by smart glasses. We don’t need to hold up our phone, we just ask our glasses.

Asking Meta glasses follow-up questions

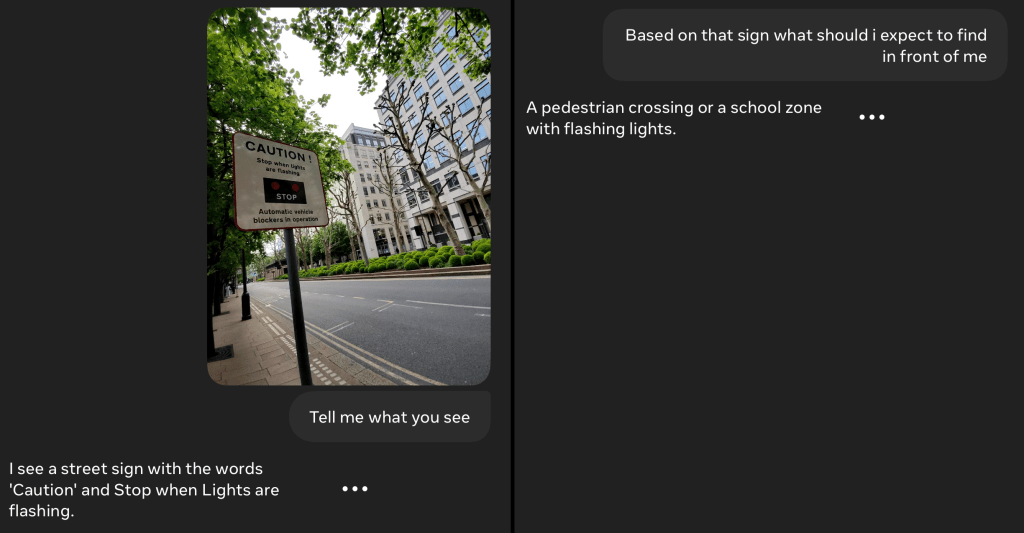

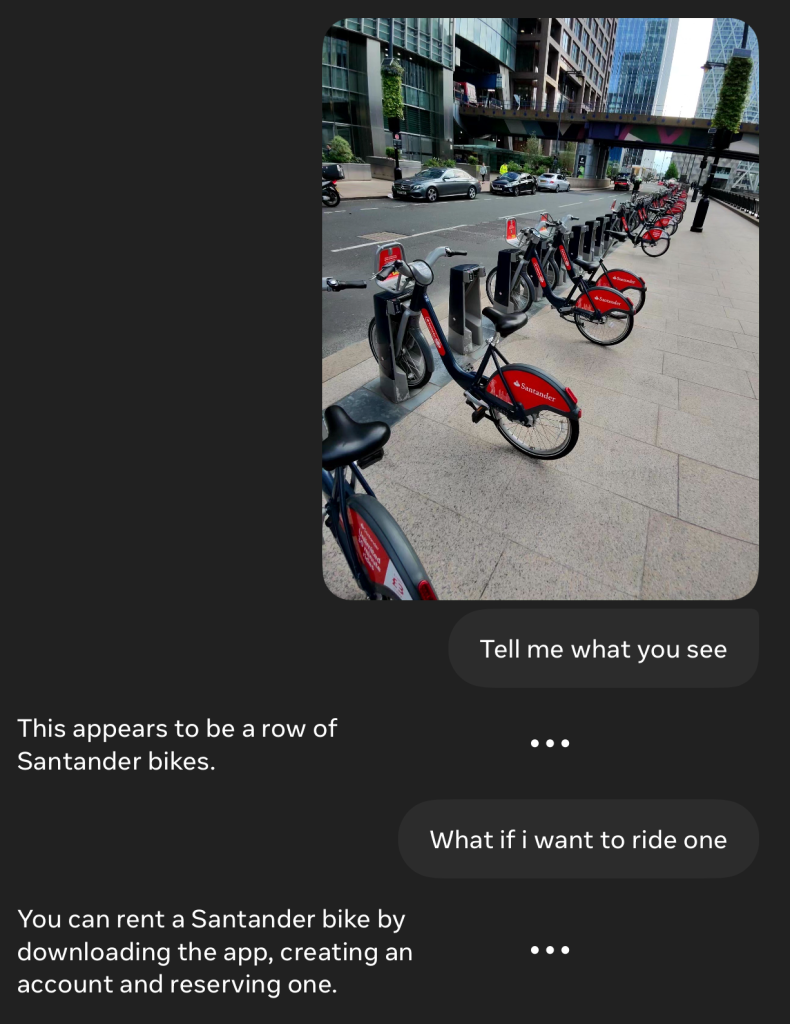

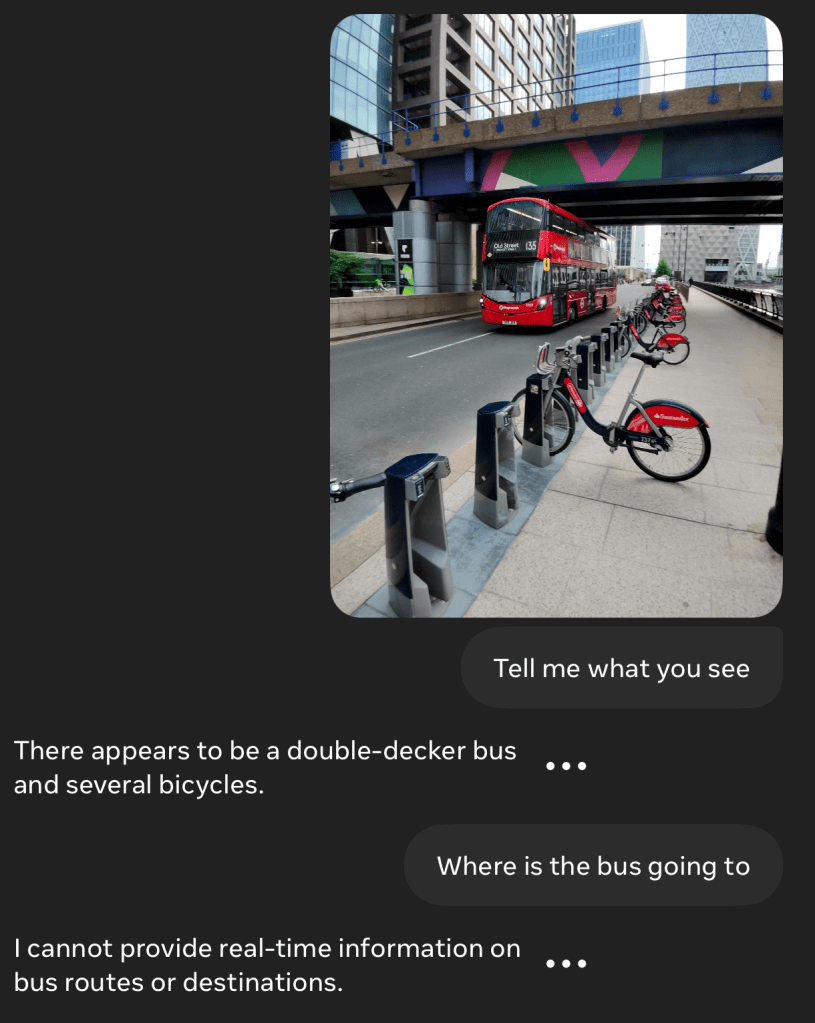

I noted that Ray-Ban Meta glasses gave me mostly generic information about what was around me, with just a few exceptions. Asking follow-up questions revealed a mix of impressive and unimpressive results – as well as some which simply point to features which will exist, but don’t yet.

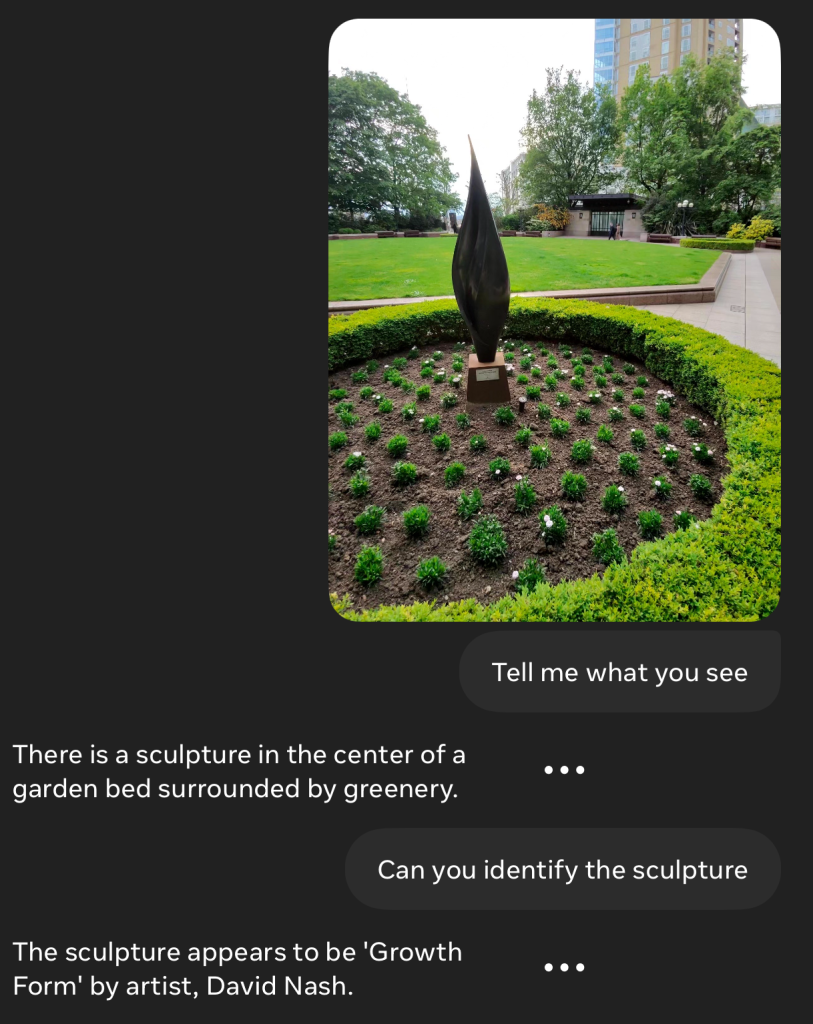

This example was incredibly impressive, especially as I’m sure the sign was too small for it to see the text, and it didn’t even know which city we were in:

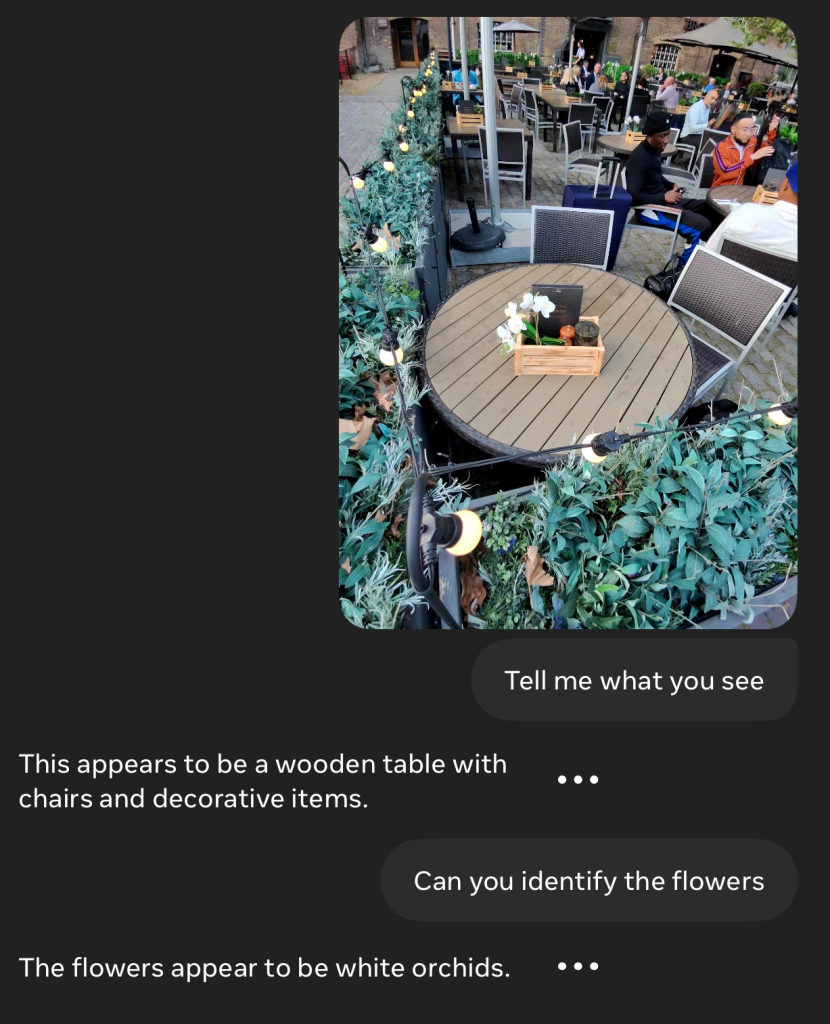

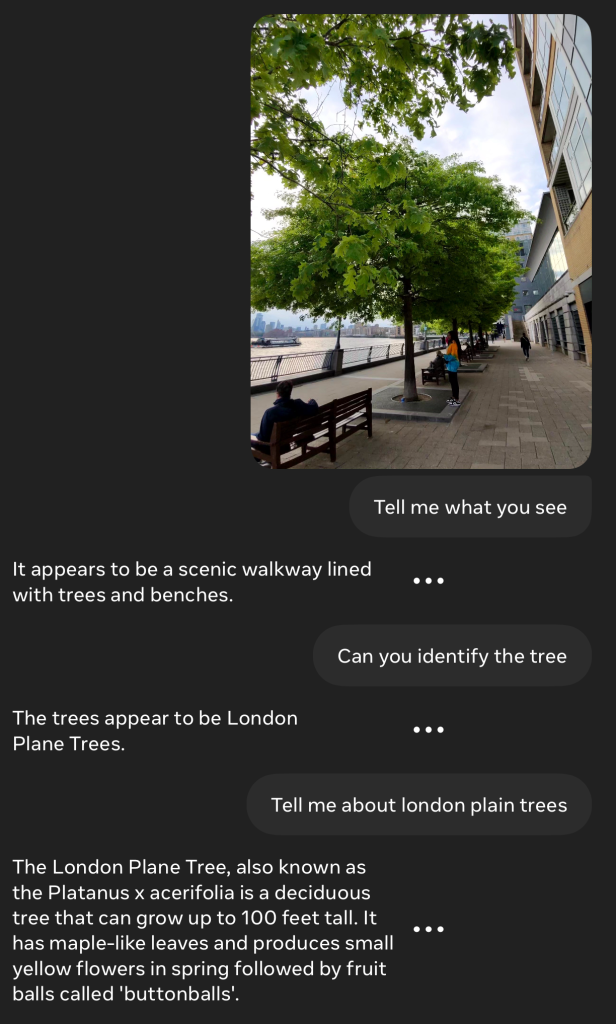

I noted last time that they didn’t specifically identify plants, but some follow-ups reveal that they can sometimes be good at this.

Also impressive:

Not a bad guess here:

It also did reasonably well with this follow-up, though again integration will be key here – it would ideally then be able to download/open the app on my phone.

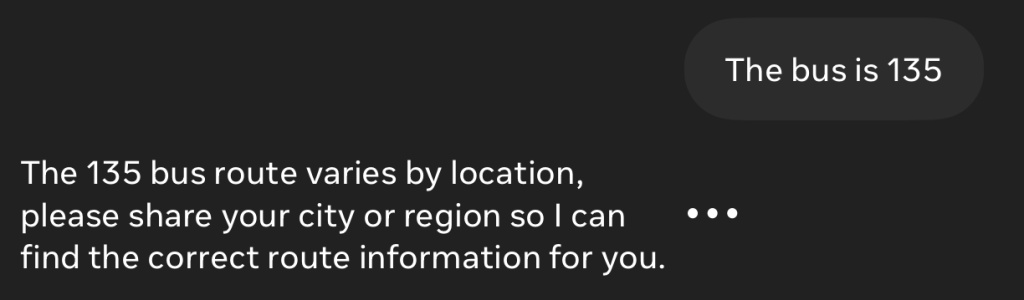

The next example really demonstrates the need for everything working together. I showed it a bus, and asked it where the bus was going. It ought to be able to read the destination board and number, and I have CityMapper on my phone, but this exchange was painful!

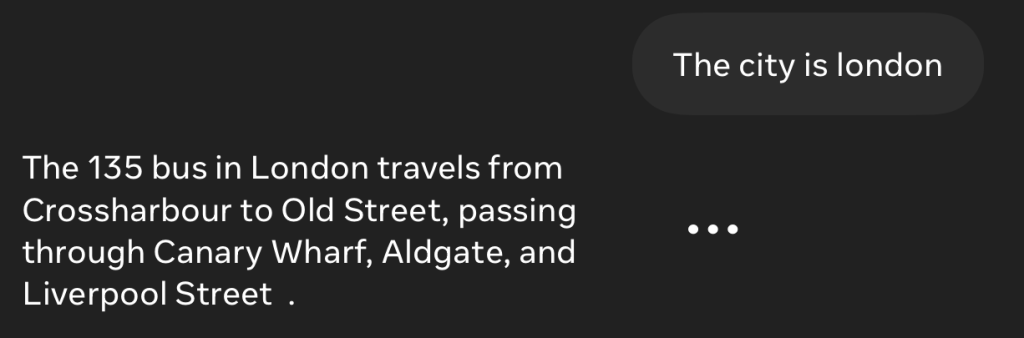

I told it the bus route was 135 – but because it has no access to the iPhone GPS, it didn’t even know it was in London.

Eventually, when I told it we were in London, it got there!

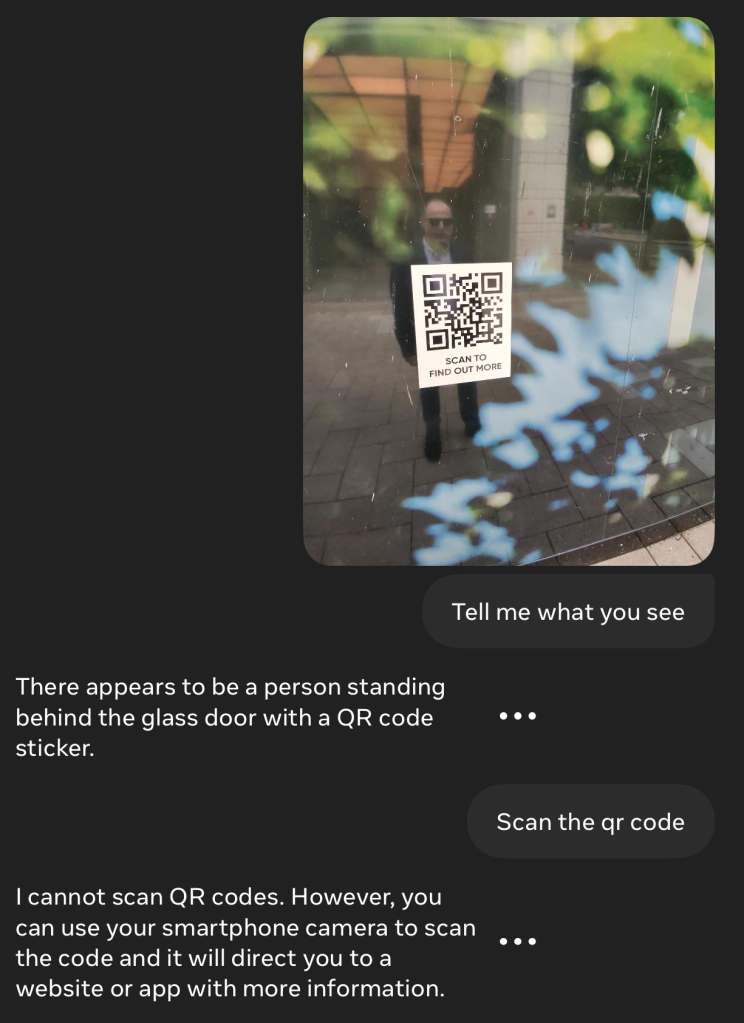

It can’t scan QR codes (I’ll forgive it mistaking my reflection for a person behind a door).

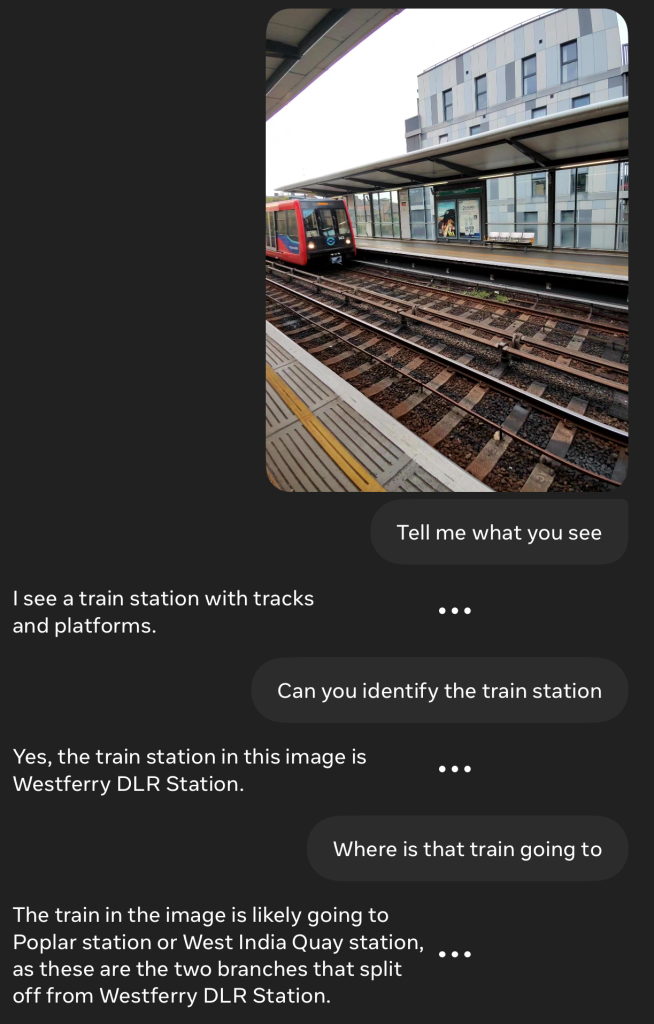

Finally, this isn’t bad given that it had no GPS and apparently recognized the station visually (maybe by reading the sign, but I suspect not, as it doesn’t usually read text unless pretty close-up):

It identified the possible next stops, rather than the destination. But with access to CityMapper or similar, it would have been able to give a much more useful answer.

So what’s missing here is Apple’s speciality

Meta is using Llama 2. OpenAI just raised the stakes with GPT-4o. And Apple is expected to use some mix of OpenAI and its own AI engine.

All of this is great news. AI is already developing at a phenomenal pace, and competition between companies will only accelerate the speed of improvements. Apple joining in would be good news anyway.

But imagine Apple bringing its trademark ecosystem approach to the table. Hardware and software working together. Integrating different devices to use all of their capabilities. That’s what’s needed to really transform this kind of technology. I can’t wait to see what Apple does with it.

Photo: Meta
